This is a five-part series on why GPU clusters are underutilized, how to measure utilization, and how to improve it.

Part 1 - Why is your GPU cluster idle

Part 2 - How to measure your GPU utilization

Part 3 - How to fix your GPU utilization

Part 4 - GPU security and isolation

Part 5 - Tips for optimizing GPU utilization in Kubernetes

Tips for optimizing GPU utilization in Kubernetes

Optimizing GPU utilization in Kubernetes requires a systematic approach that addresses monitoring, optimization, and governance simultaneously.

Assessment and Baseline Establishment

Current state analysis should focus on:

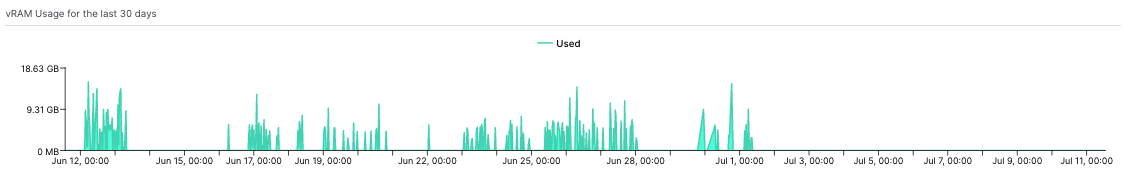

- Measuring actual GPU utilization across different workload types

- Identifying the most underutilized resources and workloads

- Calculating current costs and waste patterns

- Understanding team usage patterns and requirements
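
As a rough illustration of the waste calculation above, the sketch below ranks workloads by the cost of their allocated-but-idle GPU-hours. The `WorkloadUsage` record, the workload names, and the price per GPU-hour are hypothetical; it assumes you have already exported allocated GPU-hours and average utilization per workload from your monitoring stack.

```python
from dataclasses import dataclass


@dataclass
class WorkloadUsage:
    name: str               # workload identifier (hypothetical)
    gpu_hours: float        # GPU-hours allocated over the review window
    avg_utilization: float  # mean GPU utilization in [0.0, 1.0]


def wasted_cost(w: WorkloadUsage, price_per_gpu_hour: float) -> float:
    """Cost of the allocated-but-idle share of a workload's GPU-hours."""
    return w.gpu_hours * (1.0 - w.avg_utilization) * price_per_gpu_hour


def rank_by_waste(workloads, price_per_gpu_hour):
    """Return (name, wasted cost) pairs, most wasteful first."""
    ranked = [
        (w.name, round(wasted_cost(w, price_per_gpu_hour), 2))
        for w in workloads
    ]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Illustrative numbers only, not measurements.
    sample = [
        WorkloadUsage("notebook-research", gpu_hours=720, avg_utilization=0.10),
        WorkloadUsage("batch-training", gpu_hours=500, avg_utilization=0.80),
        WorkloadUsage("online-inference", gpu_hours=720, avg_utilization=0.35),
    ]
    for name, cost in rank_by_waste(sample, price_per_gpu_hour=2.50):
        print(f"{name}: ${cost:,.2f} idle")
```

Average utilization is a coarse proxy for waste, so a ranking like this is best treated as a starting point for investigation rather than a verdict on any one workload.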

Baseline metrics should include:

- Average GPU utilization by workload type

- Cost per GPU-hour by team and project

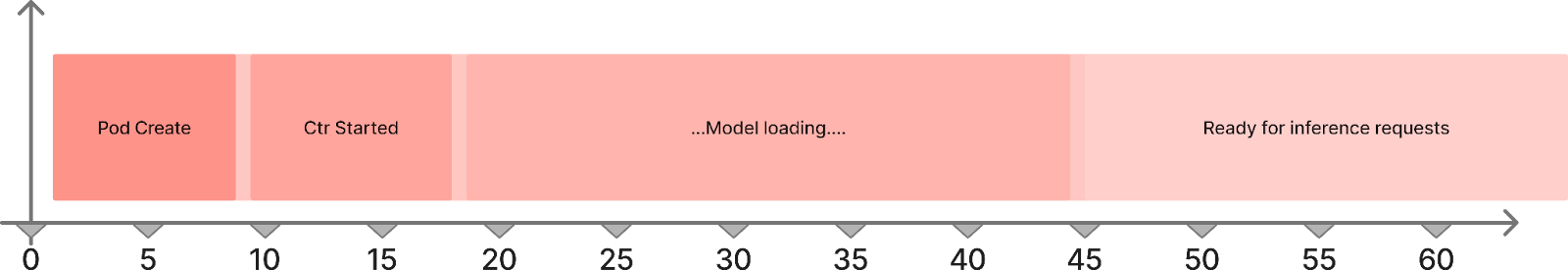

- Frequency and duration of cold starts

- Resource sharing opportunities and constraints
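
Two of the baseline metrics above can be sketched in a few lines. The example assumes utilization samples arrive as `(workload_type, utilization_percent)` pairs and cold starts as a list of durations in seconds; both input shapes and both function names are assumptions made for illustration, not the output format of any particular tool.

```python
from collections import defaultdict
from statistics import mean


def average_utilization_by_type(samples):
    """Mean utilization per workload type.

    samples: iterable of (workload_type, utilization_percent) pairs.
    """
    grouped = defaultdict(list)
    for workload_type, utilization in samples:
        grouped[workload_type].append(utilization)
    return {t: round(mean(values), 1) for t, values in grouped.items()}


def cold_start_summary(durations_seconds, window_hours):
    """Frequency (starts per hour) and mean duration of cold starts."""
    if not durations_seconds:
        return {"per_hour": 0.0, "mean_seconds": 0.0}
    return {
        "per_hour": round(len(durations_seconds) / window_hours, 2),
        "mean_seconds": round(mean(durations_seconds), 1),
    }
```

Recording these values once, before any changes are made, gives later optimization work a fixed point to compare against.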

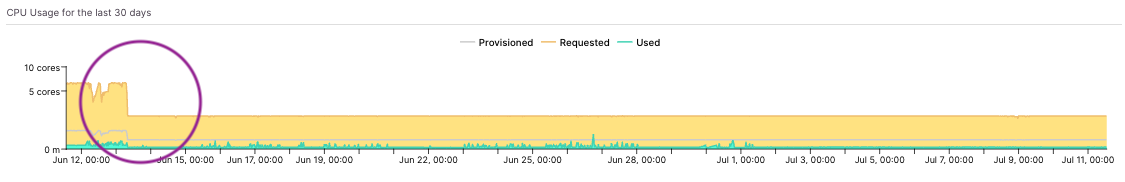

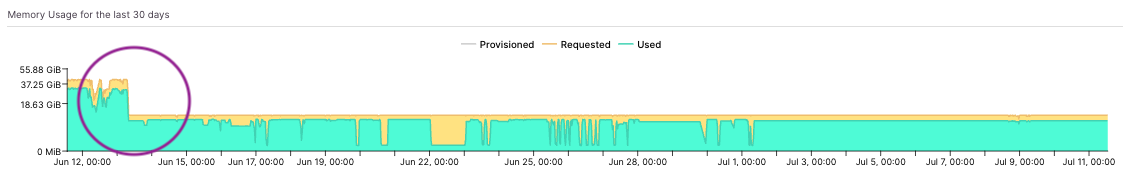

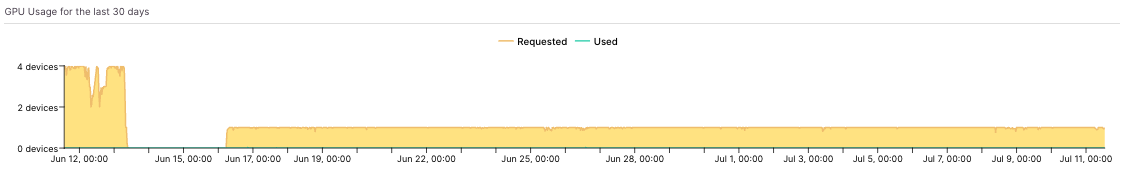

The following section briefly walks through how overprovisioning and underutilization can be examined, and how automation can then be applied to keep workloads efficient.

Optimization Prioritization

High-impact optimization opportunities typically include:

- Research workflows with long idle periods

- Inference workloads with frequent cold starts

- Training workloads running on on-demand instances without checkpointing

- Underutilized dedicated GPU nodes
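
To make the checkpointing item above concrete, here is a minimal sketch of application-level checkpointing: the training loop periodically saves its state and resumes from the last saved step after an interruption. This is a simplification and is not the CRIU-based checkpoint/restore discussed in this series. The JSON state, the function names, and the stand-in "training step" are all hypothetical; a real job would save model and optimizer state through its framework.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write state to a temp file, then rename it over the checkpoint,
    so a reader never sees a partially written file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # atomic rename on the same filesystem


def load_checkpoint(path):
    """Return the saved state, or a fresh one when no checkpoint exists."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "loss": None}


def train(path, total_steps, checkpoint_every=100):
    """Resume from the last checkpoint and run up to total_steps."""
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        state["loss"] = 1.0 / (step + 1)  # stand-in for a real training step
        state["step"] = step + 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)  # final save so the last steps are kept
    return state
```

With a loop shaped like this, a preempted job loses at most `checkpoint_every` steps of work, which is what makes interruptible capacity a reasonable fit for training.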

Quick wins that provide immediate ROI:

- Implementing basic monitoring and alerting

- Right-sizing obviously overprovisioned workloads

- Enabling spot instances for training workloads

- Consolidating underutilized resources
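
As one possible shape for the right-sizing item above, the sketch below picks the smallest MIG profile on an NVIDIA A100 40GB whose memory covers a workload's observed peak plus some headroom. It considers GPU memory only and ignores compute needs, which is a deliberate simplification. The 20% headroom default and the function name are assumptions.

```python
# MIG profiles for an NVIDIA A100 40GB as (profile name, memory in GB),
# smallest first. 4g.20gb is omitted because it offers the same memory
# as 3g.20gb and this sketch sizes on memory alone.
A100_40GB_MIG_PROFILES = [
    ("1g.5gb", 5),
    ("2g.10gb", 10),
    ("3g.20gb", 20),
    ("7g.40gb", 40),
]


def recommend_mig_profile(peak_memory_gb, headroom=0.2):
    """Smallest profile whose memory covers the peak plus headroom.

    Returns None when even the full GPU is too small.
    """
    required = peak_memory_gb * (1.0 + headroom)
    for name, memory_gb in A100_40GB_MIG_PROFILES:
        if memory_gb >= required:
            return name
    return None
```

For example, a workload peaking at 3.5 GB fits in `1g.5gb`, while one peaking at 9 GB needs `3g.20gb` once the headroom is added. A workload that is compute-bound rather than memory-bound would need a different sizing rule.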

Governance and Continuous Improvement

Resource governance frameworks should include:

- Approval processes for GPU resource allocation

- Regular usage reviews and optimization assessments

- Cost allocation and chargeback mechanisms

- Training and best practices for development teams
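
A chargeback mechanism can start very simply. The sketch below splits a shared GPU bill across teams in proportion to the GPU-hours each consumed; the team names and figures in the usage note are made up, and proportional allocation is only one of several reasonable policies.

```python
def chargeback(total_cost, gpu_hours_by_team):
    """Allocate total_cost across teams in proportion to GPU-hours used."""
    total_hours = sum(gpu_hours_by_team.values())
    if total_hours == 0:
        # Nothing was used, so nothing is charged.
        return {team: 0.0 for team in gpu_hours_by_team}
    return {
        team: round(total_cost * hours / total_hours, 2)
        for team, hours in gpu_hours_by_team.items()
    }
```

Calling `chargeback(10000, {"research": 600, "platform": 300, "search": 100})` would charge the three teams 6000.0, 3000.0, and 1000.0 respectively. Charging for allocated rather than used GPU-hours is a policy choice worth making explicit, since it changes which teams have an incentive to release idle capacity.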

Continuous improvement processes should focus on:

- Regular monitoring and optimization reviews

- Technology adoption (checkpoint/restore, MIG, etc.)

- Workload pattern analysis and optimization

- Cost efficiency benchmarking and targets

Conclusion: The Path to GPU Efficiency

GPU underutilization in Kubernetes is one of the costliest sources of infrastructure waste in modern cloud environments, and therefore one of the largest optimization opportunities. Unlike CPU and memory optimization, which might save thousands of dollars a month, GPU optimization can save tens or hundreds of thousands while also improving application performance and reliability.

The path to GPU efficiency requires understanding the unique characteristics of different ML workload types, implementing comprehensive monitoring beyond basic utilization metrics, and adopting workload-specific optimization strategies. Technologies like checkpoint/restore and CRIU-GPU are transforming the economics of GPU infrastructure by enabling more aggressive use of cost-effective compute options while maintaining reliability.

Organizations that take a strategic approach to GPU optimization, focusing on workload-specific strategies, comprehensive monitoring, and systematic governance, typically achieve cost reductions of 40-70% while improving application performance and developer productivity. The key is treating GPU optimization as a strategic initiative rather than a tactical cost-cutting exercise.

As AI/ML workloads continue to grow in importance and scale, GPU efficiency will become a critical competitive advantage. Organizations that master these optimization strategies today will be better positioned to scale their AI infrastructure cost-effectively tomorrow.
