Kubernetes has revolutionized application deployment and management, but it's also introduced a massive cost problem. According to the Datadog’s State of the Cloud Costs 2025 report, the average CPU utilization is 18%. We’ve observed 12%-18% average utilization across dozens of Kubernetes clusters and multiple enterprises. This represents billions of dollars in wasted cloud infrastructure costs annually.

Nearly half of organizations see their spending increase after adopting Kubernetes. Yet no other platform matches its unified framework for networking, storage, and process configuration at scale. The question isn't whether to use Kubernetes—it's how to use it efficiently.

This comprehensive guide will show you exactly how to reduce your Kubernetes costs by 40-80% without sacrificing performance or reliability.

Table of Contents

- Understanding the Kubernetes Cost Problem

- The Root Causes of Kubernetes Waste

- Which Workloads Waste the Most Resources

- Quick Wins: Immediate Cost Reduction Strategies

- Advanced Optimization Techniques

- Autoscaling Best Practices

- Cloud-Specific Optimization (AWS EKS, Azure AKS, Google GKE)

- Implementing Continuous Cost Optimization

- Measuring Success

Understanding the Kubernetes Cost Problem

Why Kubernetes Wasn't Built for Efficiency

Kubernetes was inspired by Google's Borg, an internal cluster management system that powered billions of container workloads with near-perfect efficiency through tightly controlled, predictive algorithms. When parts of Borg were open-sourced and rebranded as Kubernetes, much of this optimization logic was left behind.

What emerged is a flexible, API-driven system that prioritizes developer experience and resilience over resource efficiency. Kubernetes makes it easy to provision resources but difficult to optimize them, leading to systematic overprovisioning at scale.

The Magnitude of Waste

Industry benchmarks reveal the scope of the problem:

- Average cluster utilization: Across dozens of customers we work with - 12%-20% for compute. 18%-32% for Memory

- Typical overprovisioning factor: 2-5x actual resource needs

- Annual waste per cluster: $50,000-$500,000 depending on cluster size

- Time to optimization payback: Usually 30-90 days

Why Traditional Monitoring Misses the Problem

Most monitoring focuses on pod-level health metrics, but overprovisioning happens at the resource request/limit level. A pod might be "healthy" while consuming only 20% of its allocated resources—the other 80% is wasted capacity that could run other workloads but remains unusable.

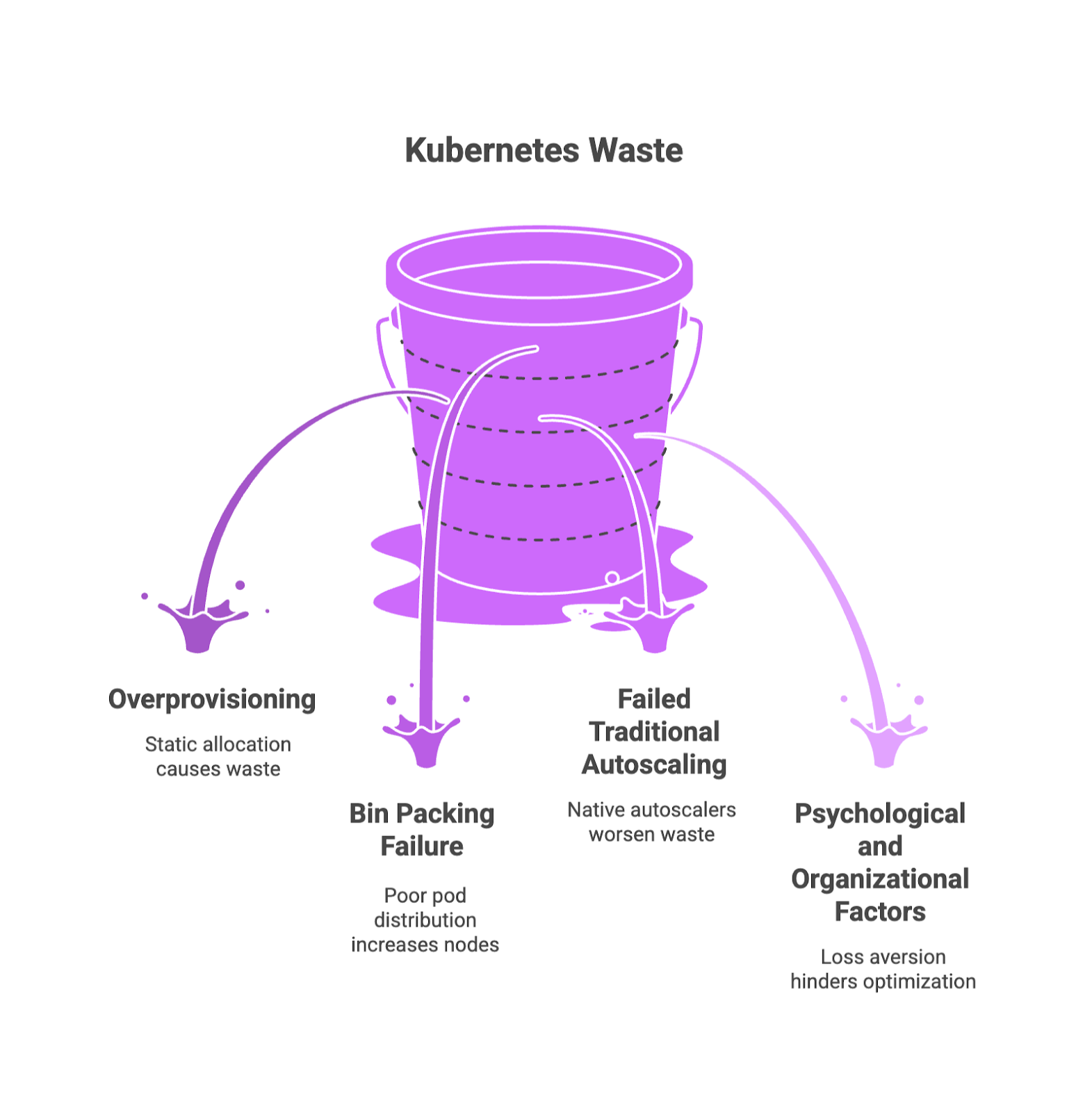

The Root Causes of Kubernetes Waste

1. Overprovisioning: The Kubernetes Default

Workloads are dynamic by nature, yet resources in Kubernetes are allocated statically. This forces developers to plan for peak times, leading to consistent overprovisioning during normal operations.

Common overprovisioning scenarios:

- JVM Services: Container-unaware defaults and off-heap memory lead to inflated requests. Fixed heap settings like Xms/Xmx are set too high, while metaspace and direct buffers push memory usage beyond heap size, causing OOM errors and oversized resource allocations.

- LLM Models: Models like DeepSeek require substantial memory when loading across multiple GPUs. After loading, memory needs drop dramatically, but those GPUs remain reserved at full capacity.

- Peak-Based Planning: Resources are sized for the busiest day of the year (like Black Friday) rather than typical daily usage, leading to 90% waste during normal operations.

2. The Kubernetes Bin Packing Problem

Kubernetes distributes pods to maximize resilience, not efficiency. The default LeastAllocated scheduler strategy spreads pods across all available nodes to "balance load," creating more nodes at 20-40% utilization instead of fewer nodes at 70% utilization.

Why bin packing fails:

- The scheduler only sees resource requests, not actual usage

- It can't predict future requirements or understand workload compatibility

- CPU-intensive batch jobs land beside memory-intensive databases, creating unusable resource fragments

- DaemonSets and topology constraints reserve fixed overhead that doesn't align with application pod shapes

Real-world example:

Given a node with 4 vCPU / 16 GiB and DaemonSets consuming 0.4 vCPU / 0.8 GiB (leaving 3.6 vCPU / 15.2 GiB usable), if your application pod needs 1 vCPU / 6 GiB:

- Two pods fit (2 vCPU / 12 GiB used)

- A third pod needs 1 vCPU / 6 GiB but only 3.2 GiB memory remains

- The autoscaler adds a new node even though 45% CPU on the first node sits idle

At scale, this pattern multiplies across hundreds of nodes, wasting massive resources.

3. Failed Traditional Autoscaling

Kubernetes native autoscalers (HPA, VPA, Cluster Autoscaler) were designed to help, but they have fundamental limitations that often make waste worse.

Vertical Pod Autoscaler (VPA) Failures:

- Restarts required: VPA resizes by evicting pods and requiring restarts, triggering cascade failures:

- SQL Pods with 8GB buffer pools take minutes to warm up, causing query timeouts

- Redis loses all cached data, forcing database hits

- Java applications suffer JVM cold starts with garbage collection spikes

- Microservices architectures experience 5-10 minutes of degraded performance

- Trailing window blindness: VPA observes an 8-day trailing window, basing recommendations on last week's patterns. It doesn't respond to seasonal changes or sudden spikes well.

Horizontal Pod Autoscaler (HPA) Failures:

- Wrong metrics: HPA reacts to CPU/memory, which don't always reflect real user load. Queue depth, latency, and request patterns matter more for many applications.

- No cost awareness: HPA scales blindly without considering node availability, binpacking, or cost impact.

- Feedback loops with VPA: When both HPA and VPA target the same metrics (CPU/memory), they create feedback loops. HPA calculates utilization as (actual usage / request) × 100. When VPA changes the request, the same usage produces different utilization, causing HPA to reverse its scaling decision, leading to constant pod restarts.

Cluster Autoscaler Limitations:

- Predefined node pools: If the right-shaped node pool isn't defined, scheduler puts pods into nearest fit instead of best fit, leaving unusable gaps

- Slow scale-down: Partial nodes block better packing because autoscaler won't drain and consolidate them fast enough

- Coarse bin-packing: Fixed node sizes mean mixed workloads don't fit well, leaving CPU or memory unusable

4. Psychological and Organizational Factors

Loss aversion: The fear of application failure outweighs "invisible" overprovisioning costs. A $10,000/month waste feels less painful than a single outage.

Optimization debt: Teams focus on shipping features rather than optimizing existing infrastructure, treating resource costs as "someone else's problem."

Lack of feedback loops: Developers never see the cost impact of their resource allocation decisions. Most organizations have a drastic disconnect between who provisions resources and who monitors finances.

Siloed responsibilities: Development teams set resource requirements, but platform/operations teams pay the bills, creating misaligned incentives.

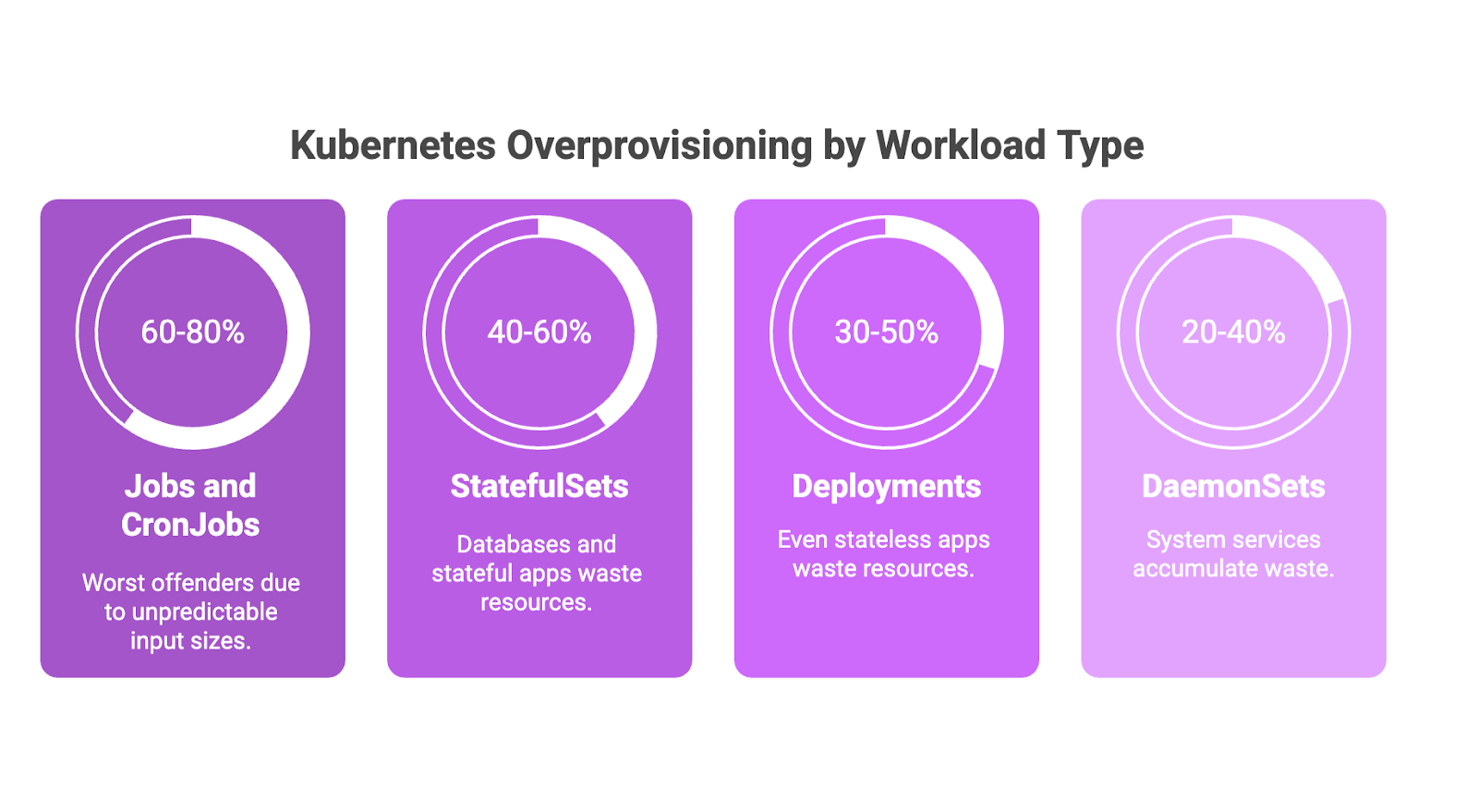

Which Workloads Waste the Most Resources

Based on analysis of 200+ production clusters, here's how different Kubernetes workload types rank for resource waste:

1. Jobs and CronJobs (60-80% average overprovisioning)

Why they're the worst offenders:

- Unpredictable input sizes: Batch processing jobs handle variable data volumes, leading to worst-case scenario resource allocation

- Conservative failure prevention: Job failures are expensive (data reprocessing, missed SLAs), so teams overprovision heavily

- Lack of historical data: Unlike long-running services, batch jobs often lack comprehensive usage history

- "Set and forget" mentality: Jobs are configured once and rarely revisited, even as data patterns change

Real-world impact: A financial services company ran nightly ETL jobs with 8 CPU cores and 32GB RAM. Actual usage averaged 1.2 CPU cores and 4GB RAM—an 85% overprovisioning rate costing $180,000 annually.

2. StatefulSets (40-60% average overprovisioning)

Why databases and stateful apps waste resources:

- Database buffer pool overallocation: DBAs allocate large buffer pools based on available memory rather than working set size

- Storage overprovisioning: Persistent volumes sized for 2-3 year projected growth rather than current needs

- Cache layer conservatism: Redis, Memcached, and Elasticsearch receive memory based on peak theoretical usage rather than actual cache hit patterns

Real-world impact: An e-commerce platform allocated 64GB RAM to PostgreSQL based on total database size. Working set was only 18GB, with buffer pool averaging 28% utilization. Right-sizing saved $8,000/month per instance.

3. Deployments (30-50% average overprovisioning)

Why even stateless apps waste resources:

- Development vs. production gap: Resource requirements from development don't reflect production patterns

- Missing autoscaling: Many deployments run with static replica counts and no HPA/VPA

- Generic resource templates: Standard templates applied across different applications without customization

- Fear of performance issues: Teams overprovision to avoid any possibility of degradation

Real-world impact: A SaaS company's API services allocated 2 CPU cores and 4GB RAM per pod. 95th percentile usage was 400m CPU and 800MB RAM. Implementing HPA and rightsizing reduced costs by 60% while improving performance.

4. DaemonSets (20-40% average overprovisioning)

Why system services accumulate waste:

- One-size-fits-all approach: Same resource allocation across heterogeneous node types

- Cumulative impact: Small per-DaemonSet waste multiplies across every node (5 DaemonSets × 100m CPU waste × 100 nodes = 50 CPU cores wasted)

- Lack of visibility: System workloads receive less monitoring attention than application workloads

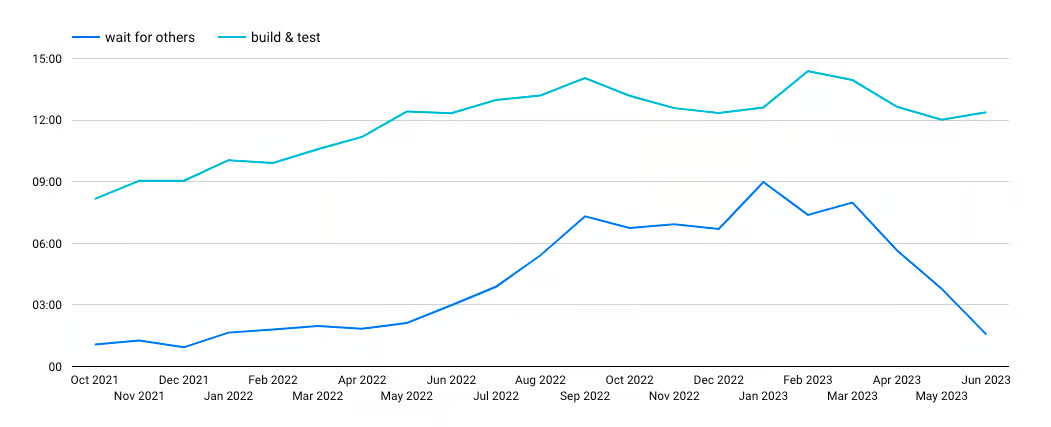

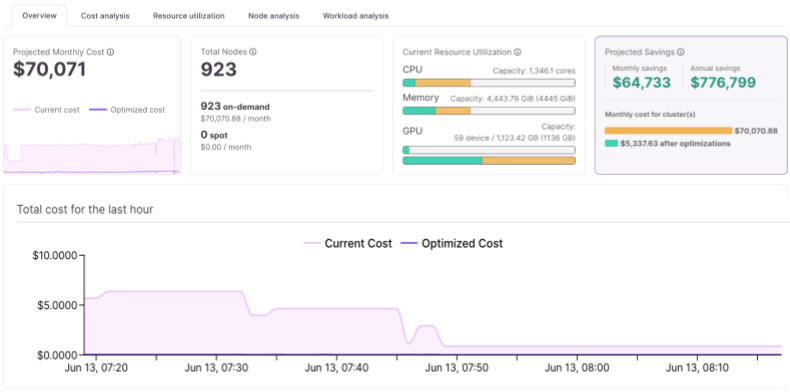

Real-world impact: A multi-tenant SaaS company ran two production clusters where DaemonSets used uniform requests across mixed node types. Rightsizing to p95 usage and excluding heavy DaemonSets from small nodes reclaimed ~42 vCPU and ~110 GiB RAM, enabling node group consolidation. Monthly compute spend fell from $70,070 to $5,337 in five weeks (92% reduction) with SLOs intact.

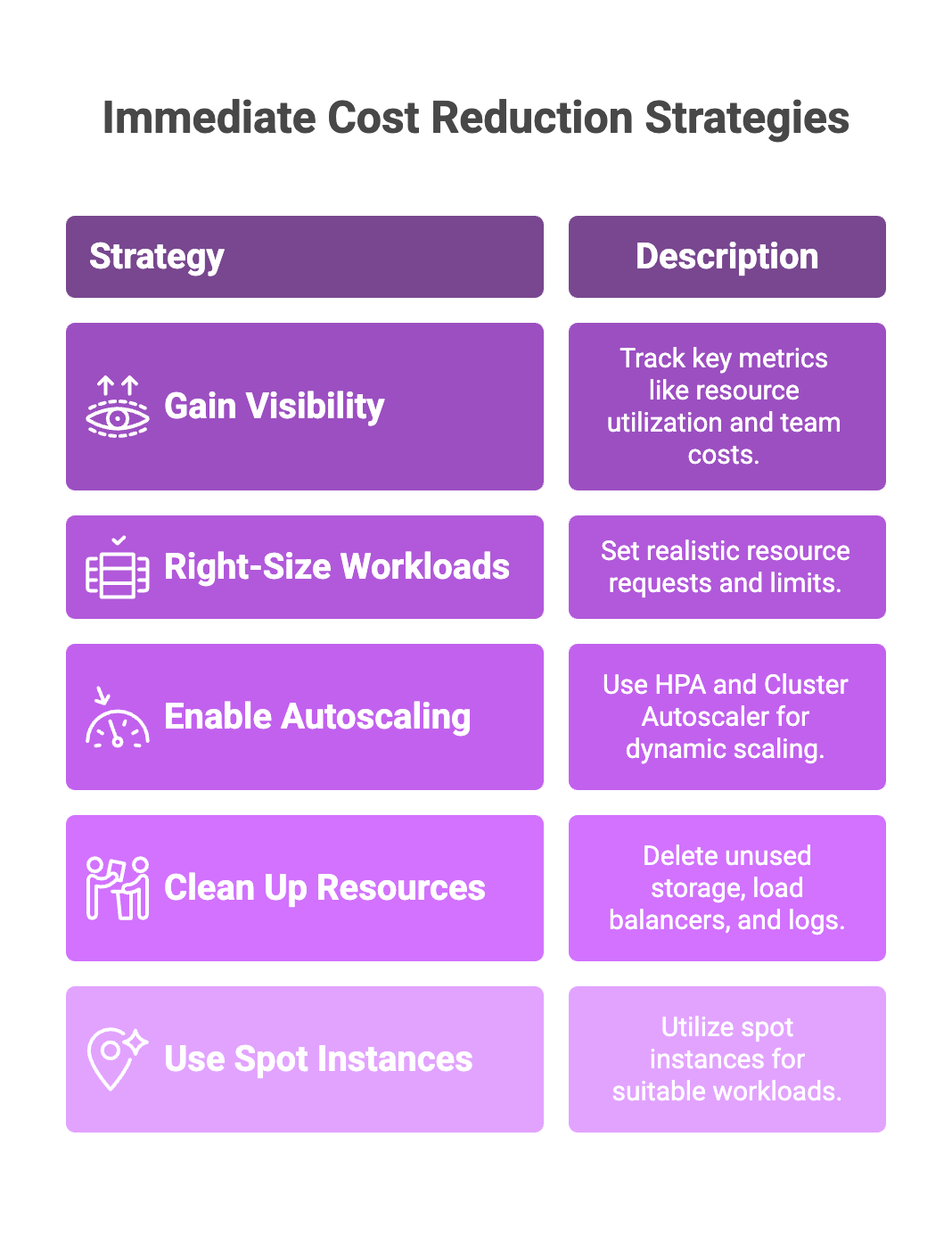

Quick Wins: Immediate Cost Reduction Strategies

Step 1: Gain Visibility Into Your Current Spend

Before optimizing, you need to understand where money is going. Track these key metrics:

- Per-pod resource utilization: Actual vs. requested CPU and memory

- Per-namespace/team costs: Which teams or services consume the most

- Node utilization trends: Are nodes running at 20% or 70%?

- Resource waste patterns: Which workloads consistently use <30% of allocated resources

Native cloud dashboards give high-level views but don't connect usage to cost impact. Use specialized Kubernetes cost monitoring tools for real-time visibility into per-pod, per-namespace, and per-team usage.

Step 2: Right-Size Your Workloads

Immediate actions:

- Review resource requests and limits:

- Set realistic CPU and memory requests based on actual usage (use p95/p99 percentiles, not peaks)

- Avoid using the same value for requests and limits—it restricts binpacking

- Revisit requests regularly, especially after scaling teams or traffic

- Fix obvious overprovisioning:

- Start with Jobs and CronJobs (highest waste)

- Profile batch jobs with representative datasets

- Create resource profiles for different input size categories

- Separate requests from limits:

- Requests determine scheduling (set based on typical usage)

- Limits prevent runaway processes (set 1.5-2x requests)

- Different values improve binpacking and cluster utilization

Step 3: Enable and Configure Autoscaling

Horizontal Pod Autoscaler (HPA):

For stateless workloads with variable traffic:

- Start with CPU target of 70-80%

- Add custom metrics for queue depth or request rate when possible

- Set appropriate min/max replica bounds

- Configure stabilization windows (15-30s scale-up, 5min scale-down)

Cluster Autoscaler:

For dynamic cluster capacity:

- Enable on each node pool to remove unused nodes automatically

- Ensure workloads don't use fixed node affinities that block rescheduling

- Balance between minimum node count (baseline availability) and autoscale flexibility

Important: Don't use HPA and VPA on the same metrics. If you must combine them, let HPA scale replicas and VPA recommend memory only.

Step 4: Clean Up Unused Resources

Persistent storage:

- Delete unused PersistentVolumeClaims, snapshots, and idle backups

- Use regional disks only when required (they cost 2x more than zonal)

- Monitor and clean up orphaned LoadBalancers or unused Ingress configs

Monitoring overhead:

- Set shorter log and metric retention for non-critical workloads

- Exclude noisy namespaces from monitoring systems

- Tune diagnostic settings to avoid collecting unused data

Step 5: Use Spot/Preemptible Instances Strategically

Ideal for:

- CI/CD pipelines

- Batch jobs and data processing

- Stateless microservices with graceful termination

Best practices:

- Create separate node pools for spot-compatible workloads

- Add tolerations so workloads can fall back to on-demand if needed

- Use committed use discounts for baseline capacity

- Combine spot with checkpoint/restore for state preservation

Cloud-specific options:

- AWS: EC2 Spot Instances (up to 90% savings)

- Azure: Spot VMs (up to 90% savings)

- GCP: Preemptible VMs (up to 80% savings)

Advanced Optimization Techniques

1. Intelligent Bin Packing

To optimize bin packing, you need to observe real-time pod usage and apply workload-specific policies:

- Consolidate background jobs easily while allocating more resources to latency-sensitive APIs

- Monitor utilization continuously and rebalance resources accordingly

- Free up underutilized nodes through live consolidation

Traditional Kubernetes requires you to choose between resilience and efficiency. Advanced platforms enable both through live migration without restarts (using technologies like CRIU), safely moving workloads from underutilized nodes to fuller ones while preserving SLAs.

2. Automated Rightsizing Without Restarts

Manual rightsizing doesn't scale. The "set once, forget forever" approach leads to drift as workloads evolve.

What you need:

- Continuous observation of live and historical usage

- Automatic rewriting of resource allocations based on patterns

- No pod restarts or service disruption during optimization

- Protection against OOM errors and pod failures

Advanced rightsizing uses machine learning (like XGBoost forecasting) to predict future resource needs, avoiding inflated baselines for workloads that spike at startup (like JVM applications).

3. Proactive Scaling

Traditional autoscalers react to current or past usage. Proactive scaling predicts what's ahead:

- Combine recent trends with historical load to warm up capacity before spikes

- Avoid HPA cold starts or cascade restarts during demand surges

- Handle seasonal patterns (holiday traffic, end-of-month processing)

- Prevent overprovisioning before it happens

This is especially critical for workloads that spike at startup and stabilize later (JVM-based apps, ML model loading), where traditional autoscalers lock in inflated baselines.

4. Quality of Service Optimization

Not all workloads need the same reliability guarantees. Optimize QoS classes strategically:

- Guaranteed: Critical services with predictable resource needs

- Requests = Limits

- Highest priority, never evicted

- Use for databases, payment processing, critical APIs

- Burstable: Services with variable but bounded resource usage

- Requests < Limits

- Most production workloads fit here

- Can burst during spikes, throttled when resources are scarce

- BestEffort: Non-critical batch workloads

- No requests or limits set

- Lowest priority, first to be evicted

- Use for development environments, experimental workloads, non-critical batch jobs

5. Resource Quotas and Governance

Implement namespace-level controls to prevent overprovisioning:

apiVersion: v1

kind: ResourceQuota

metadata:

name: compute-quota

namespace: production

spec:

hard:

requests.cpu: "100"

requests.memory: "200Gi"

limits.cpu: "200"

limits.memory: "400Gi"

persistentvolumeclaims: "10"Governance best practices:

- Set quotas per namespace/team

- Implement approval processes for quota increases

- Create resource allocation guidelines and training

- Establish regular optimization reviews

Autoscaling Best Practices

Understanding the Autoscaling Stack

Kubernetes supports multiple autoscaling layers:

Horizontal Pod Autoscaler (HPA): Adjusts number of pod replicas

- Ideal for: Stateless workloads with variable traffic

- Supports: CPU, memory, custom metrics (queue depth, request rate)

- Use when: You need to scale pods based on load

Vertical Pod Autoscaler (VPA): Adjusts CPU/memory requests per pod

- Ideal for: Batch jobs, stateful services that don't scale horizontally

- Limitation: Often requires pod restarts unless using live migration with CRIU for example.

- Use when: Workload can't scale out and needs per-pod tuning

Cluster Autoscaler (CA): Adjusts number of nodes

- Ideal for: Dynamic cluster capacity management

- Works with: AWS EKS, Azure AKS, Google GKE

- Use when: Workloads exceed node capacity or you want to minimize idle nodes

KEDA: Event-driven autoscaling

- Ideal for: Queue-based workloads, scheduled tasks

- Supports: 40+ scalers (Kafka, SQS, Azure Service Bus, Prometheus, etc.)

- Use when: You need to scale based on queue length or custom business signals

HPA Configuration Best Practices

1. Choose the right metrics:

- Don't rely on CPU alone—it's often a poor proxy for user load

- Use custom metrics for queue depth, latency (p95/p99), request rate

- Combine multiple metrics when appropriate

2. Configure stabilization windows:

- Scale-up: 15-30 seconds (faster response to load)

- Scale-down: 5 minutes (prevent flapping)

- In Kubernetes 1.33+: Use configurable tolerance for fine-tuned control

3. Set appropriate bounds:

apiVersion: autoscaling/v2

kind: HorizontalPodAutoscaler

metadata:

name: webapp-hpa

spec:

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: webapp

minReplicas: 3 # Baseline for availability

maxReplicas: 50 # Cost control

metrics:

- type: Resource

resource:

name: cpu

target:

type: Utilization

averageUtilization: 70

behavior:

scaleUp:

stabilizationWindowSeconds: 30

policies:

- type: Percent

value: 100

periodSeconds: 15

scaleDown:

stabilizationWindowSeconds: 300

policies:

- type: Percent

value: 50

periodSeconds: 30VPA Configuration Best Practices

1. Start with recommendation mode:

apiVersion: autoscaling.k8s.io/v1

kind: VerticalPodAutoscaler

metadata:

name: my-app-vpa

spec:

targetRef:

apiVersion: "apps/v1"

kind: Deployment

name: my-app

updatePolicy:

updateMode: "Off" # Recommendations only2. Use selective automation:

- Enable automatic updates only for non-critical workloads

- Monitor recommendations for a week before enabling

- Set min/max bounds to prevent extremes

3. Coordinate with HPA:

- If using both, HPA should manage CPU scaling

- VPA should handle memory only

- Alternative: Use VPA in "Off" mode for recommendations while HPA handles live scaling

Cluster Autoscaler Configuration

1. Configure proper node pools:

- Define multiple node pools for different workload types

- Include node size diversity to improve bin packing

- Set appropriate min/max node counts

2. Tune scale-down behavior:

--scale-down-delay-after-add=10m

--scale-down-unneeded-time=10m

--scale-down-utilization-threshold=0.53. Handle special cases:

- Use pod disruption budgets (PDBs) carefully—they can block scale-down

- Add appropriate node affinities for specialized workloads (GPU, high-memory)

- Monitor scale-up/down latency and adjust timeouts accordingly

Cloud-Specific Optimization

AWS EKS Cost Optimization

1. Right-size EC2 instances:

- Use AWS Compute Optimizer recommendations

- Consider Graviton instances (up to 40% price-performance improvement)

- Match instance types to workload shapes (compute vs. memory optimized)

2. Leverage Karpenter for smarter provisioning:

- Automatically selects optimal instance types

- Faster scale-up than Cluster Autoscaler

- Better bin packing through flexible instance selection

- Works across AZs and instance families

3. Use Savings Plans and Reserved Instances:

- Apply 1- or 3-year commitments to steady workloads

- Analyze usage trends before committing

- Combine with Spot for variable workloads

4. Optimize data transfer:

- Keep traffic within the same AZ when possible

- Use VPC endpoints for AWS service access

- Monitor and optimize cross-region data transfer

Azure AKS Cost Optimization

1. Choose the right VM SKUs:

- Use Standard_D/E series for general workloads

- Dv4/Ev4 for compute-intensive

- Azure Spot VMs for dev/test and fault-tolerant workloads

2. Enable AKS cluster autoscaler:

- Configure per node pool

- Set appropriate min/max node counts

- Ensure workloads don't block rescheduling (check node affinities)

3. Optimize Azure Monitor costs:

- Set shorter log retention for non-critical workloads

- Exclude noisy namespaces from Container Insights

- Tune diagnostic settings to collect only what's needed

4. Use availability zones strategically:

- Zone-redundant configurations cost more

- Use only for production, critical workloads

- Single-zone for dev/test environments

Google GKE Cost Optimization

1. Choose deployment mode wisely:

- Autopilot: Google manages infrastructure, you pay per pod

- Good for: Hands-off management, unpredictable workloads

- Trade-off: Less control, potentially higher costs for stable workloads

- Standard: You manage nodes, more control

- Good for: Optimized configurations, stable workloads

- Trade-off: More operational overhead

2. Use Committed Use Discounts:

- 1-year: 37% discount

- 3-year: 55% discount

- Apply to baseline, predictable capacity

3. Optimize persistent storage:

- Use Standard Persistent Disks for non-critical data

- SSD only when IOPS requirements justify cost

- Delete unused PVs and snapshots regularly

4. Configure preemptible VMs:

- Up to 80% savings vs. regular VMs

- Good for batch processing, CI/CD

- Combine with node auto-provisioning for flexible capacity

Implementing Continuous Cost Optimization

The Traditional Approach (Manual)

Phase 1: Assessment (Week 1-2)

- Deploy resource monitoring across all workload types

- Identify most overprovisioned workloads

- Calculate current waste and potential savings

- Prioritize optimization efforts by impact

Phase 2: Quick Wins (Week 3-4)

- Implement HPA for suitable deployments

- Right-size obviously overprovisioned jobs and CronJobs

- Configure resource quotas to prevent future waste

- Deploy VPA in recommendation mode

Phase 3: Advanced Optimization (Week 5-8)

- Implement custom metrics scaling

- Optimize DaemonSet resource allocation

- Deploy comprehensive cost monitoring

- Establish ongoing optimization processes

Phase 4: Governance and Culture (Ongoing)

- Create resource allocation guidelines

- Implement approval processes for resource changes

- Train teams on optimization best practices

- Establish regular optimization reviews

Challenges with manual optimization:

- Requires ongoing manual effort and expertise

- Resource requests drift over time as workloads evolve

- Difficult to maintain across multiple clusters

- No protection during deploys or traffic spikes

The Automated Approach

Modern Kubernetes optimization platforms solve these challenges through continuous, automated optimization:

1. Live rightsizing:

- Automatically adjusts CPU and memory requests as workloads run

- No pod restarts or redeploys required

- Prevents overprovisioning and complements HPA/VPA without conflicts

2. Intelligent bin packing:

- Consolidates workloads onto fewer nodes

- Rebalances in real time as usage changes

- Frees up unused capacity and reduces VM count

3. Zero-downtime live migration:

- Moves containers between nodes without restarts

- Preserves state using snapshot + restore (CRIU)

- Enables node consolidation without service disruption

4. Predictive scaling:

- Uses machine learning to forecast future resource needs

- Warms up capacity before spikes

- Avoids HPA cold starts and cascade restarts

5. Automated instance selection:

- Selects optimal VM types based on actual workload shape

- Adapts as traffic patterns evolve

- Maximizes savings across regions and availability zones

6. GPU optimization:

- Multi-tenancy through MIG (Multi-Instance GPU)

- Live rightsizing for ML workloads

- Checkpoint/restore for GPU containers

Measuring Success

Key Performance Indicators

Track these metrics to measure optimization effectiveness:

Utilization metrics:

- Cluster utilization: Target >60% CPU, >70% memory

- Node utilization: ~70%-80%

- Resource efficiency ratio: 80%-90%

Cost metrics:

- Cost per workload: Monthly spend per service

- Cost per team/namespace: Track accountability

- Total monthly infrastructure spend: Track overall trend

- Cost per request/transaction: Business-aligned efficiency

Operational metrics:

- Optimization coverage: Percentage of workloads with proper sizing

- Time to optimization: Speed of adjusting to new patterns

- Stability indicators: OOM events, pod evictions, throttling

Monitoring and Alerting

Set up proactive alerts for:

Cost anomalies:

- Workloads with <30% resource utilization for >7 days

- Monthly cost increases >10%

- New deployments without resource requests/limits

- Cluster utilization dropping below targets

Stability issues:

- OOM kills increasing

- Pod evictions due to resource pressure

- Autoscaler thrashing (rapid scale up/down cycles)

- Nodes unable to schedule pending pods

Expected Results

Based on documented case studies and industry data, organizations implementing comprehensive optimization typically achieve:

- 40-70% reduction in compute costs

- 30-60% improvement in cluster utilization

- Improved application performance through better resource density

- Better resource planning and capacity management

- Enhanced cost visibility and accountability

- 30-90 day payback period on optimization efforts

Real-world examples:

- Financial services company: Reduced ETL job costs by 85%, saving $180,000 annually

- E-commerce platform: Saved $8,000/month per database instance through rightsizing

- SaaS company: 60% cost reduction while improving API performance

- Cybersecurity data platform: 50% compute reduction in 24 hours, 80% in 5 days

- AI/ML platform: 80% workload cost reduction in 12 hours

Conclusion: The Path to Efficient Kubernetes

Kubernetes overprovisioning isn't just a cost problem—it's a systematic issue rooted in how Kubernetes was designed. The platform prioritizes resilience and developer experience over resource efficiency, making waste feel normal.

But this waste is preventable. Through proper monitoring, intelligent rightsizing, smart autoscaling, and continuous optimization, you can eliminate the majority of Kubernetes waste while improving application performance and reliability.

The key principles:

- Treat optimization as ongoing practice, not a one-time project

- Gain visibility into actual resource usage vs. allocated resources

- Right-size workloads based on real usage patterns, not guesswork

- Automate where possible to maintain efficiency as workloads evolve

- Use the right autoscaler for each workload type

- Monitor cost impact of scaling and resource decisions

- Establish governance to prevent waste from creeping back in

When manual optimization isn't enough:

For teams running large-scale Kubernetes deployments across multiple clusters, manual optimization becomes unsustainable. Resource requests drift, workloads shift unpredictably, and nodes sit partially idle despite best efforts.

This is where platforms like DevZero become invaluable. Through live rightsizing, intelligent bin packing, zero-downtime migration, and predictive scaling, DevZero delivers 40-80% cost reductions without requiring teams to rewrite applications or manually tune every workload.

Ready to reduce your Kubernetes spend?

Start by gaining visibility into your current resource usage and waste patterns. Identify quick wins like overprovisioned jobs and missing autoscalers. Then decide whether to pursue manual optimization or leverage automation to maintain efficiency continuously.

The best time to start optimizing was when you first deployed Kubernetes. The second best time is now.

Frequently Asked Questions

Q: How much can I realistically save on Kubernetes costs?

A: Most organizations achieve 40-70% cost reduction through comprehensive optimization. Quick wins like rightsizing Jobs/CronJobs and enabling autoscaling can deliver 20-30% savings in the first month. Advanced techniques like intelligent bin packing and live migration unlock additional 30-50% savings.

Q: Will cost optimization affect application performance?

A: When done correctly, optimization often improves performance. Better bin packing reduces network latency by consolidating related services. Right-sizing based on actual usage eliminates resource contention. The key is maintaining appropriate headroom and using gradual optimization policies.

Q: Should I use HPA, VPA, or both?

A: It depends on your workload:

- Use HPA for stateless workloads that scale horizontally (web apps, APIs)

- Use VPA for workloads that don't scale horizontally well (databases, stateful apps)

- Don't use both on the same metrics—they create feedback loops

- Consider using VPA in "Off" mode for recommendations while HPA handles scaling

Q: How do I start optimizing without disrupting production?

A: Follow this safe approach:

- Start with visibility—monitor usage for 1-2 weeks

- Identify obvious waste (workloads using <30% of allocated resources)

- Begin with non-production environments

- Use gradual rollouts and canary deployments for production

- Monitor stability indicators (OOM events, latency) closely during changes

Q: What's the difference between Cluster Autoscaler and Karpenter?

A: Cluster Autoscaler works with predefined node pools and scaling groups, operating at the infrastructure level. Karpenter is more application-aware, automatically selecting optimal instance types based on pod requirements. Karpenter typically provides faster scale-up, better bin packing, and more cost-efficient instance selection, but requires more cloud provider integration.

Q: How often should resource requests be reviewed and updated?

A: Continuously. Set up automated monitoring to flag workloads with consistent usage below 50% of allocated resources. Review and update manually at least quarterly, or whenever workload patterns change significantly (new features, traffic changes, architectural shifts).

Q: Can I optimize GPU workloads the same way?

A: GPU optimization requires specialized approaches:

- Use GPU multi-tenancy (MIG on NVIDIA GPUs) to share expensive GPU resources

- Implement time-sharing for sequential workloads

- Monitor GPU utilization closely—idle GPUs are extremely expensive

- Consider fractional GPU allocation for inference workloads

- Use checkpoint/restore to enable live migration of GPU workloads

Q: What happens if I set resource requests too low?

A: Risks include:

- OOM (Out of Memory) kills if memory limits are exceeded

- CPU throttling if CPU limits are hit

- Pod eviction if node resources are exhausted

- Performance degradation under load

Mitigation: Set requests based on p95/p99 usage, not average. Monitor OOM events and throttling. Use limits 1.5-2x higher than requests as safety buffer.

Q: How do I convince my team to invest time in cost optimization?

A: Make the business case:

- Calculate current waste in dollar terms

- Show potential savings (typically 40-70% of Kubernetes infrastructure)

- Calculate ROI period (usually 30-90 days)

- Highlight non-cost benefits: improved performance, better resource planning

- Start with quick wins to demonstrate value

- Automate optimization to minimize ongoing team effort

Additional Resources

Kubernetes Cost Analysis:

- The Cost of Kubernetes: Which Workloads Waste the Most Resources

- Kubernetes Workload Types: When to Use What

- Which Kubernetes Workloads to Use and When

Autoscaling Guides:

- Kubernetes Autoscaling: How HPA, VPA, and CA Work

- Kubernetes HPA: Scale Pods Based on Resource Usage

- Kubernetes VPA: Rightsize Pod Resource Requests

- Kubernetes Cluster Autoscaler: Scale Nodes When Pods Don't Fit

Cloud-Specific Guides:

- AKS Cost Optimization Guide: How to Reduce Azure Kubernetes Costs

- GKE Cost Optimization: 9 Tips for 2025

- EKS Cost Optimization: Best Practices and Tips

Advanced Topics:

- Container Checkpoint/Restore with CRIU

- What Makes DevZero Different

- Karpenter vs. Cluster Autoscaler: How They Compare

About DevZero: DevZero is a Kubernetes cost optimization platform that helps companies reduce cloud infrastructure costs by 40-80% through live rightsizing, intelligent bin packing, and zero-downtime workload migration. Unlike traditional autoscalers, DevZero operates at cluster, node, and workload levels simultaneously, using predictive ML to optimize resources before waste occurs. Get started with a free cost assessment.

.webp)