What Is GPU Waste?

AI teams are moving fast—but their GPU bills are moving faster. As models grow and workloads shift between training, batch jobs, and real-time inference, clusters accumulate “always-on” capacity that rarely runs at full tilt. The result is a quiet drain: GPUs sitting loaded and ready, replicas idling for spikes that never arrive, and large instances chosen “just to be safe.”

GPU waste represents the systematic underutilization of expensive resources in Kubernetes clusters running AI and machine learning workloads. Unlike CPU or memory inefficiencies that might waste thousands of dollars monthly, GPU waste typically burns through tens or hundreds of thousands of dollars due to the 10-50x higher cost of GPU infrastructure.

The average GPU-enabled Kubernetes cluster operates at 15-25% utilization. At $30-50/hour per NVIDIA H100, a 50-GPU cluster running at 20% utilization leaves the equivalent of 40 GPUs idle around the clock. That wastes over $200,000 every week, or more than $10 million a year, on a single cluster. This waste shows up as models loaded in memory that receive no requests, over-replicated services across environments, idle research workloads, and training jobs without proper checkpointing.
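The idle-capacity arithmetic can be sketched in a few lines of Python. This is a minimal sketch that assumes a flat per-GPU hourly rate and treats utilization as the fraction of paid GPU time doing useful work; `idle_gpu_cost` is a hypothetical helper, not part of any library.

```python
HOURS_PER_WEEK = 24 * 7  # 168


def idle_gpu_cost(num_gpus: int, utilization: float, hourly_rate: float, hours: float) -> float:
    """Dollars paid for the idle share of a GPU fleet over `hours`.

    Assumes utilization is the fraction (0-1) of paid GPU time doing useful work.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    idle_gpu_equivalents = num_gpus * (1.0 - utilization)
    return idle_gpu_equivalents * hourly_rate * hours


# The 50-GPU, 20%-utilization example at both ends of the $30-50/hour range.
for rate in (30, 50):
    weekly = idle_gpu_cost(50, 0.20, rate, HOURS_PER_WEEK)
    print(f"${rate}/hour per GPU: ${weekly:,.0f}/week, ${weekly * 52:,.0f}/year")
```

With these inputs the weekly figure is $201,600 at $30/hour and $336,000 at $50/hour.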

This guide gives you a pragmatic way to see where the waste is coming from and how to cut it—without sacrificing performance. We’ll walk through common workload patterns, how to measure what matters, and the practical steps to right-size, scale smartly, and recover costs while maintaining reliability.

In this guide:

- Understanding GPU Workload Patterns

- Why GPU Waste Happens

- Measuring GPU Utilization

- Strategies to Fix GPU Waste

- Implementation Best Practices

- DevZero Platform

Understanding GPU Workload Patterns

GPU utilization challenges stem from diverse and unpredictable machine learning workloads. Unlike traditional applications with consistent resource patterns, ML workloads exhibit dramatically different characteristics.

Training Workloads run for hours to days, consume 60-120GB of GPU memory for large models, and achieve 70-95% compute utilization during active training. Without checkpoint/restore capabilities, they must restart from scratch whenever they are interrupted.

Real-Time Inference must keep p99 latency under 100ms, handle request rates that vary with business hours, and absorb cold-start penalties of 30 seconds to 5 minutes for model loading. Because requests are often sparse, these GPUs typically run at only 20-40% utilization.

Batch Inference processes large volumes asynchronously, tolerates higher latency, achieves 60-90% utilization through large batch sizes, and runs on schedules, which creates optimization opportunities.

Research Workflows exhibit the most irregular patterns with 10-15% GPU usage over time due to long analysis periods, code modification, and overnight idle time while environments remain reserved.


Why GPU Waste Happens

Over-Replication

The most common waste source: teams deploy 8 replicas of an inference service in each of production, staging, and development. DCGM metrics show every replica with its model loaded in GPU memory (90% VRAM utilization), but compute utilization reveals that only 1-2 replicas receive requests while the other 6 sit idle. The pattern multiplies: 8 production + 8 staging + 8 development = 24 GPUs when only 2-3 are needed across all environments, representing 87-92% waste.

Training Interruptions

A 12-hour training run on 8× H100 GPUs costs $7,680. If the job is interrupted at hour 10 without checkpointing, it must restart from scratch: the first 10 hours ($6,400) are wasted and the total spend rises to $14,080, an 83% increase. This risk pushes teams to over-provision to minimize interruptions, avoid cost-effective spot instances, and hold GPUs idle, creating systematic underutilization.

Base run:

- 8× H100 GPUs

- Cost per hour: $640

- 12-hour run cost: 12 x 640 = $7,680

Interruption scenario (no checkpointing):

- Interrupted at hour 10

- Wasted cost: 10 x 640 = $6,400 (all progress is lost when the job restarts from scratch)

Total cost to complete training after restart:

6,400 (lost partial run) + 7,680 (full rerun) = $14,080

Cost increase:

(14,080 - 7,680) / 7,680 ≈ 83%

Summary:

Training interruptions can drive 80%+ cost inflation, pushing teams to over-provision capacity and contributing to chronic GPU underutilization.
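The restart arithmetic generalizes to any run length and interruption point. This is a minimal sketch assuming a job with no checkpoints loses everything done before the failure and then repeats the full run; `restart_cost` is a hypothetical helper. The guard also rejects an interruption that falls after the run would already have finished.

```python
def restart_cost(run_hours: float, interrupted_at: float, cost_per_hour: float) -> tuple[float, float]:
    """Total cost and percentage increase when a job with no checkpoints restarts from scratch."""
    if not 0 <= interrupted_at < run_hours:
        raise ValueError("interruption must happen before the run completes")
    base = run_hours * cost_per_hour
    wasted = interrupted_at * cost_per_hour  # every hour before the failure is lost
    total = wasted + base                    # the full run is then repeated
    increase_pct = (total - base) / base * 100
    return total, increase_pct


total, increase = restart_cost(run_hours=12, interrupted_at=10, cost_per_hour=640)
print(f"total ${total:,.0f}, +{increase:.0f}%")  # total $14,080, +83%
```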

Cold Start Tax

Large model inference takes 105-295 seconds from pod start to first request: model download (30-90s), GPU memory allocation (10s), model loading into VRAM (60-180s), and warmup (5-15s). Teams over-provision replicas to avoid this scale-up latency, leaving multiple idle replicas "ready for spikes" even though traffic patterns show spikes are rare and those replicas sit idle 90% of the time.
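Summing the phase ranges listed above gives the best- and worst-case cold start. A minimal sketch, assuming the phases run strictly one after another (no overlap between download and loading); the phase names are just labels.

```python
# Cold-start phases for a large model as (min, max) seconds, taken from the text above.
COLD_START_PHASES = {
    "model download": (30, 90),
    "GPU memory allocation": (10, 10),
    "model load into VRAM": (60, 180),
    "warmup": (5, 15),
}


def cold_start_range(phases: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Best- and worst-case seconds from pod start to first served request."""
    best = sum(low for low, _ in phases.values())
    worst = sum(high for _, high in phases.values())
    return best, worst


print(cold_start_range(COLD_START_PHASES))  # (105, 295)
```

If your serving stack streams weights while downloading, the phases overlap and the real range will be shorter than this sum.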

Instance Mis-sizing

Teams default to the largest available GPUs without analyzing their actual requirements.

Model Requirement vs. GPU Capacity Example:

- Model VRAM needed: 58 GB

- H100 VRAM provided: 80 GB

- Unused VRAM: 80 - 58 = 22 GB

- Waste: 22/80 ≈ 28%

Cost Comparison

- H100 cost: 2.5–3× the cost of an A100-80GB

- A100-80GB capacity: sufficient for a 58 GB workload

- Unnecessary cost: ~60-67% of the H100 spend (1 - 1/2.5 to 1 - 1/3), from running on an H100 instead of an A100

Summary:

For mid-sized models, H100-class GPUs leave a meaningful share of VRAM unused, and at a 2.5-3× price premium roughly 60-67% of the spend is unnecessary compared with an appropriately sized A100-80GB.

Ancillary Resource Waste

GPU instances bundle substantial CPU and RAM that often go unused. Example: a p5.48xlarge instance (8× H100 GPUs):

- Compute: 192 vCPUs; observed use 15–30 vCPUs; utilization (15-30)/192 ≈ 8%-16%; waste 84%-92%

- Memory: 2 TB RAM; observed use 700–900 GB; utilization (700-900)/2000 ≈ 35%-45%; waste 55%-65%

- Cost: $320 per hour

Summary:

Averaging the vCPU waste (84-92%) and RAM waste (55-65%), non-GPU resources show roughly 70-80% waste, driven by low vCPU and RAM consumption relative to instance capacity.

Wondering how much you're wasting? Most teams are shocked when they see their actual utilization data. Get a free GPU waste assessment with detailed analysis of your cluster.

Measuring GPU Utilization

Traditional nvidia-smi provides only point-in-time snapshots. Effective measurement requires the NVIDIA Data Center GPU Manager (DCGM) integrated with Kubernetes.

DCGM Foundation

DCGM provides persistent metric collection at 1-second intervals, active health monitoring, policy-based alerts, cluster-wide aggregation, and Prometheus integration. The NVIDIA GPU Operator simplifies deployment with automated installation across all GPU nodes.

Critical Metrics

GPU Compute Utilization (DCGM_FI_DEV_GPU_UTIL) measures the percentage of the sample period during which at least one kernel was executing on the GPU.

Interpretation: 90-100% excellent, 70-89% good, 50-69% moderate with optimization potential, 30-49% poor, 0-29% severe underutilization requiring immediate action.
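The interpretation bands translate directly into a lookup. A minimal sketch, assuming you classify the average of a window of DCGM_FI_DEV_GPU_UTIL samples (for example, a week of Prometheus data) rather than a single instantaneous reading; `interpret_gpu_util` is a hypothetical helper.

```python
# Interpretation bands for DCGM_FI_DEV_GPU_UTIL (0-100); each lower bound is inclusive.
BANDS = [
    (90, "excellent"),
    (70, "good"),
    (50, "moderate: optimization potential"),
    (30, "poor"),
    (0, "severe underutilization: act immediately"),
]


def interpret_gpu_util(samples: list[float]) -> tuple[float, str]:
    """Average a window of utilization samples and map the average to a band."""
    if not samples:
        raise ValueError("need at least one sample")
    avg = sum(samples) / len(samples)
    for lower, label in BANDS:
        if avg >= lower:
            return avg, label
    raise ValueError(f"utilization out of range: {avg}")


print(interpret_gpu_util([12, 18, 25, 9]))
```

Averaging over a window avoids overreacting to a single busy or quiet scrape.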

GPU Memory Utilization (derived from DCGM_FI_DEV_FB_USED and DCGM_FI_DEV_FB_FREE, both reported in MiB) measures allocated VRAM versus free VRAM.

Critical distinction: high memory utilization does NOT mean high GPU utilization.

Example: 90% memory (a ~72 GB model loaded on an 80 GB GPU) with 5% compute (receiving almost no requests) indicates classic over-replication: models sitting idle.

Multi-Dimensional Analysis

Analyzing memory and compute together reveals patterns:

- High memory + low compute = Over-replication (scale down replicas)

- High compute + low memory = Potential for smaller GPU SKUs

- Low memory + low compute = Idle resources (reclaim immediately)

- High memory + high compute = Well-optimized workload
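The four patterns above can be checked per GPU from two DCGM readings. A minimal sketch: memory utilization is approximated as used / (used + free) framebuffer, and the 50% cutoff for "high" is an assumed threshold you would tune; `memory_util` and `classify` are hypothetical helpers.

```python
def memory_util(fb_used_mib: float, fb_free_mib: float) -> float:
    """VRAM utilization (%) from DCGM_FI_DEV_FB_USED and DCGM_FI_DEV_FB_FREE (MiB)."""
    total = fb_used_mib + fb_free_mib
    return 100.0 * fb_used_mib / total if total else 0.0


def classify(gpu_util: float, mem_util: float, threshold: float = 50.0) -> str:
    """Map a (compute %, memory %) pair to one of the four patterns (threshold is an assumption)."""
    high_compute = gpu_util >= threshold
    high_memory = mem_util >= threshold
    if high_memory and not high_compute:
        return "over-replication: scale down replicas"
    if high_compute and not high_memory:
        return "candidate for a smaller GPU SKU"
    if not high_memory and not high_compute:
        return "idle: reclaim"
    return "well-optimized"


# A replica with its model loaded (90% VRAM) but almost no traffic (5% compute).
print(classify(gpu_util=5.0, mem_util=memory_util(72_000, 8_000)))
```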

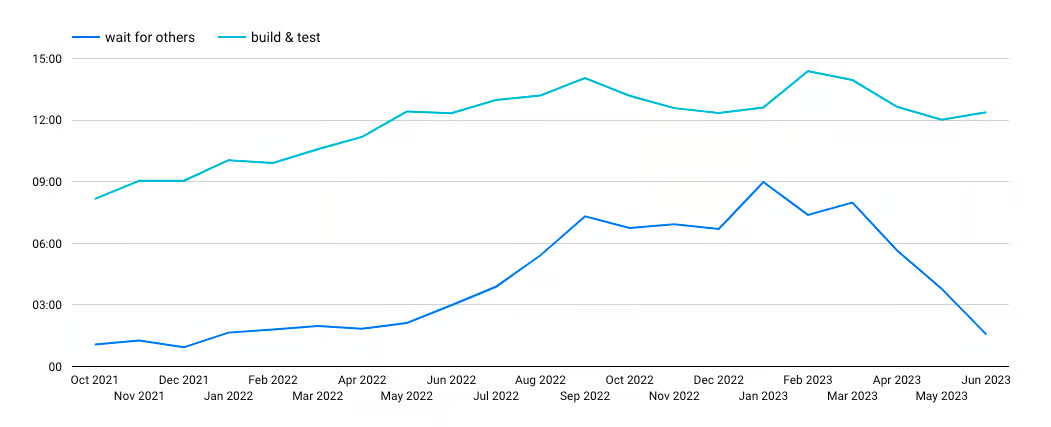

Temporal Patterns

Daily patterns for inference services typically show 5-10% utilization overnight, 40-60% during the morning ramp-up, 50-75% during peak business hours, 30-45% during the evening decline, and 8-15% late at night.

Optimization: scale down from 6PM to 8AM. That window covers 14 of every 24 hours (58% of the day), so most replicas can be released for more than half of each day.

Weekly patterns for batch jobs might show Monday training (85% utilization), idle Tuesday-Wednesday (0%), Thursday batch inference (70%), and idle Friday through the weekend. Weekly average: (85 + 70) / 7 ≈ 22%.

Optimization: use spot instances, schedule efficiently, release GPUs between runs.

Cost Attribution

Integrating DCGM with Kubernetes metadata enables workload-specific analysis: track GPU hours by namespace, calculate costs using standard hourly rates for each GPU type, flag wasted hours where utilization falls below a 20% threshold, and attribute spending to teams and applications for accountability.
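The attribution logic can be sketched once GPU metrics are joined with pod metadata. A minimal sketch, assuming you already have one record per GPU per hour with its namespace, GPU type, and mean utilization; the `GpuHour` shape, the namespaces, and the per-type rates are hypothetical (the rates reuse this guide's illustrative figures).

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class GpuHour:
    """One GPU-hour of usage tagged with Kubernetes metadata (hypothetical shape)."""
    namespace: str
    gpu_type: str
    avg_util: float  # mean DCGM_FI_DEV_GPU_UTIL over the hour, 0-100


# Assumed hourly rates per GPU type; substitute your own pricing.
HOURLY_RATE = {"H100": 40.0, "A100-80GB": 22.0}
WASTE_THRESHOLD = 20.0  # hours below this utilization count as wasted


def attribute(samples: list[GpuHour]) -> dict[str, dict[str, float]]:
    """Aggregate GPU hours, cost, and wasted hours/cost per namespace."""
    report: dict[str, dict[str, float]] = defaultdict(
        lambda: {"gpu_hours": 0.0, "cost": 0.0, "wasted_hours": 0.0, "wasted_cost": 0.0}
    )
    for s in samples:
        rate = HOURLY_RATE[s.gpu_type]
        row = report[s.namespace]
        row["gpu_hours"] += 1
        row["cost"] += rate
        if s.avg_util < WASTE_THRESHOLD:
            row["wasted_hours"] += 1
            row["wasted_cost"] += rate
    return dict(report)


samples = [
    GpuHour("search", "H100", 65.0),
    GpuHour("search", "H100", 4.0),
    GpuHour("research", "A100-80GB", 2.0),
]
for namespace, row in attribute(samples).items():
    print(namespace, row)
```

Grouping by a team or cost-center label instead of namespace only changes the key used in `report`.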

Strategies to Fix GPU Waste

For additional optimization strategies, see our comprehensive guide on tips for optimizing GPU utilization in Kubernetes.

Training: Checkpoint/Restore

NVIDIA's cuda-checkpoint utility, used together with CRIU (Checkpoint/Restore In Userspace), can snapshot a running training container's complete state, including GPU memory, and restore it on another node. This enables:

- 60-80% cost reduction via spot instances (safely use $12/hour spot vs $40/hour on-demand)

- Resume from checkpoint vs restarting (day 3 vs day 0 for interrupted jobs)

- Automatic job migration during maintenance without work loss

- Workload mobility for better cluster scheduling

Cost impact:

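Without checkpointing on on-demand instances, a 7-day training run on 8 GPUs at $40/hour costs $53,760. If it is interrupted after day 5, the 5 completed days ($38,400) are lost and the job reruns from scratch, for a total of $92,160.

With checkpointing on spot instances ($12/hour), the base cost is $16,128. If the job is interrupted after day 5, it resumes from its latest checkpoint. With hourly checkpoints, at most one hour of work ($96) is lost, for a total of about $16,224, roughly 82% less than the on-demand restart.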
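Both scenarios fit one small cost model. A minimal sketch under stated assumptions: a single interruption after a given number of full days; without checkpoints all prior work is lost, and with checkpoints the worst case loses one full checkpoint interval. `training_cost` is a hypothetical helper, and the $40 and $12 rates are this guide's illustrative on-demand and spot prices.

```python
from typing import Optional

HOURS_PER_DAY = 24


def training_cost(days: float, gpus: int, rate_per_gpu_hour: float,
                  interrupted_on_day: Optional[float] = None,
                  checkpoint_every_hours: Optional[float] = None) -> float:
    """Total cost of a training job that may be interrupted once.

    No checkpoints: everything done before the interruption is lost and the
    job reruns from scratch. With checkpoints: worst case, one full checkpoint
    interval of work is lost and redone.
    """
    hourly = gpus * rate_per_gpu_hour
    base = days * HOURS_PER_DAY * hourly
    if interrupted_on_day is None:
        return base
    elapsed_hours = interrupted_on_day * HOURS_PER_DAY
    if checkpoint_every_hours is None:
        lost_hours = elapsed_hours
    else:
        lost_hours = min(elapsed_hours, checkpoint_every_hours)
    return base + lost_hours * hourly


on_demand = training_cost(7, 8, 40.0, interrupted_on_day=5)
spot = training_cost(7, 8, 12.0, interrupted_on_day=5, checkpoint_every_hours=1)
print(f"on-demand restart ${on_demand:,.0f}, spot + checkpoints ${spot:,.0f}, "
      f"{1 - spot / on_demand:.0%} lower")
```

The model ignores checkpoint write and restore overhead, which adds a little to the checkpointed total in practice.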

Inference: Right-Sizing and Replica Optimization

Collect 7-day baseline showing per-replica GPU utilization, request rates, and memory usage. Analysis might reveal 8 replicas where only 2 receive meaningful traffic (42% and 38% utilization) while 6 show 0-3% utilization—all with 88% VRAM (models loaded but idle).

Graduated Scaling: Phase 1 reduces 8→4 replicas with zero-downtime rolling updates, monitoring 48-72 hours for latency degradation or GPU saturation. If successful, Phase 2 reduces 4→2 replicas with continuous monitoring and rollback capability.

Cost Impact: Removing 6 idle GPUs at $40/hour saves $175,200/month.

Horizontal Pod Autoscaling: Implement an HPA targeting 70% GPU utilization and 300ms p99 latency. Configure a minimum of 2 and a maximum of 6 replicas, conservative scale-down (5-minute stabilization window, at most 25% fewer replicas at a time), and aggressive scale-up (1-minute stabilization window, able to double quickly if needed). This provides cost efficiency during low-traffic periods and responsiveness during spikes.
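To see how those settings interact, here is a simplified simulation of a single HPA evaluation. It follows the documented HPA rule of `ceil(currentReplicas * currentValue / targetValue)` per metric, takes the largest proposal, skips changes within the default 10% tolerance, and then applies the 25% scale-down and doubling scale-up limits. Stabilization windows and policy periods are omitted. In a real cluster, GPU utilization and p99 latency would reach the HPA as custom or external metrics (for example through a Prometheus adapter), which is not shown. `hpa_desired_replicas` is a hypothetical helper.

```python
import math


def hpa_desired_replicas(current: int, gpu_util: float, p99_ms: float,
                         target_util: float = 70.0, target_p99_ms: float = 300.0,
                         min_replicas: int = 2, max_replicas: int = 6,
                         tolerance: float = 0.1) -> int:
    """One simplified HPA evaluation (stabilization windows and policy periods omitted)."""
    proposals = []
    for value, target in ((gpu_util, target_util), (p99_ms, target_p99_ms)):
        ratio = value / target
        if abs(ratio - 1.0) <= tolerance:
            proposals.append(current)  # close enough to target: no change for this metric
        else:
            proposals.append(math.ceil(current * ratio))
    proposal = max(proposals)  # the most demanding metric wins

    if proposal < current:  # conservative: remove at most 25% of replicas per step
        proposal = max(proposal, math.ceil(current * 0.75))
    elif proposal > current:  # aggressive: at most double per step
        proposal = min(proposal, current * 2)
    return max(min_replicas, min(max_replicas, proposal))


print(hpa_desired_replicas(4, gpu_util=35, p99_ms=180))  # quiet period: scale down gently
print(hpa_desired_replicas(2, gpu_util=95, p99_ms=650))  # spike: scale up fast
```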

Instance Right-Sizing

Analyze peak memory usage over 7-14 days, add 20% headroom, map to GPU SKUs. Example: 58.3GB peak usage → 70GB recommended → A100-80GB at $16,060/month vs current H100 at $29,200/month. Savings: $13,140/month (45% reduction).
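The right-sizing rule (peak plus 20% headroom, then the cheapest SKU that fits) can be sketched as follows. The catalog holds only the two SKUs and monthly prices used in the example above; a real catalog would list every GPU type you can provision. `Sku` and `recommend` are hypothetical names.

```python
import math
from typing import NamedTuple


class Sku(NamedTuple):
    name: str
    vram_gb: int
    monthly_cost: float


# Illustrative monthly prices from the example above (730 hours/month).
CATALOG = [
    Sku("A100-80GB", 80, 16_060.0),
    Sku("H100", 80, 29_200.0),
]


def recommend(peak_gb: float, headroom: float = 0.20, catalog: list[Sku] = CATALOG) -> tuple[int, Sku]:
    """Peak usage plus headroom, rounded up, mapped to the cheapest SKU that fits."""
    required_gb = math.ceil(peak_gb * (1 + headroom))
    fits = [sku for sku in catalog if sku.vram_gb >= required_gb]
    if not fits:
        raise ValueError(f"no single GPU in the catalog offers {required_gb} GB")
    return required_gb, min(fits, key=lambda sku: sku.monthly_cost)


required, choice = recommend(58.3)
current = CATALOG[1]
saving = current.monthly_cost - choice.monthly_cost
print(required, choice.name, f"${saving:,.0f}/month", f"{saving / current.monthly_cost:.0%}")
```

VRAM is only the first filter. Latency requirements may still rule out a cheaper SKU, which is why the migration steps below include load tests and a canary.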

Migration: test in staging, run comprehensive load tests, implement canary deployment (10% traffic to A100), monitor 48 hours, progressively increase A100 weight (10%→25%→50%→75%→100%) while monitoring for degradation.

Multi-Instance GPU (MIG)

MIG partitions A100, H100, and H200 GPUs into smaller isolated instances with guaranteed QoS. The available profiles depend on the card. An 80 GB H100 supports up to 7× 1g.10gb or 4× 1g.20gb. A 141 GB H200 supports 7× 1g.18gb (maximum multi-tenancy), 3× 2g.35gb (balanced for mid-size models), or 2× 3g.71gb (larger models). Learn more about GPU multi-tenancy strategies.

Use case: 7 small inference services, each using under 15 GB of VRAM. Current: 7 dedicated GPUs at $40/hour = $280/hour, each at 10-15% utilization (severe waste). MIG solution: 1× 141 GB H200 (priced here at the same $40/hour) partitioned into 7× 1g.18gb instances. Savings: $240/hour, about $175,200/month (86% reduction), with isolation maintained.
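Whether a set of services fits on fewer MIG-partitioned GPUs comes down to slice size and slices per GPU. A minimal sketch that gives each service one uniform slice; the per-service VRAM figures are hypothetical, and the layout table reflects NVIDIA's published single-slice profiles as understood here, so check it against NVIDIA's MIG documentation for your exact GPU model. `gpus_needed` is a hypothetical helper.

```python
import math
from typing import Optional

# Uniform single-slice layouts: (GB per instance, max instances per GPU).
LAYOUTS = {
    "H100 80GB, 1g.10gb": (10, 7),
    "H100 80GB, 1g.20gb": (20, 4),
    "H200 141GB, 1g.18gb": (18, 7),
}


def gpus_needed(service_vram_gb: list[float], slice_gb: float, slices_per_gpu: int) -> Optional[int]:
    """GPUs required when each service gets one slice; None if any service does not fit."""
    if any(v > slice_gb for v in service_vram_gb):
        return None
    return math.ceil(len(service_vram_gb) / slices_per_gpu)


services = [12, 9, 14, 11, 13, 8, 10]  # hypothetical per-service VRAM (GB), all under 15 GB
for name, (slice_gb, per_gpu) in LAYOUTS.items():
    print(name, gpus_needed(services, slice_gb, per_gpu))
```

Mixed layouts (for example, one 3g slice plus several 1g slices) can pack more tightly but need a bin-packing step instead of this uniform-slice count.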

Time-Based Autoscaling

Schedule-based autoscaling leverages temporal patterns: Configure business hours (8AM-6PM Mon-Fri) for 6 replicas, off-hours (6PM-8AM Mon-Fri) for 2 replicas, weekends for 1 replica.

Cost Impact: Previous 8 replicas × 24 hours × 7 days = 1,344 GPU-hours/week.

Optimized: business hours 300, off-hours 140, weekends 48 = 488 GPU-hours/week total. Reduction: 1,344→488 = 63.7% reduction saving 856 GPU-hours × $40 = $34,240/week = $1,780,480/year.
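The GPU-hour totals can be reproduced from the schedule. A minimal sketch, assuming one GPU per replica and the guide's $40/GPU-hour rate; the schedule check simply confirms the three windows cover all 168 hours of a week.

```python
GPU_HOURLY_RATE = 40.0  # assumed per-GPU rate; one GPU per replica

# (replicas, hours per day, days per week)
SCHEDULE = [
    (6, 10, 5),  # business hours: 8AM-6PM Mon-Fri
    (2, 14, 5),  # off-hours: 6PM-8AM Mon-Fri
    (1, 24, 2),  # weekends
]


def weekly_gpu_hours(schedule: list[tuple[int, int, int]]) -> int:
    """GPU-hours per week for a replica schedule that covers the whole week."""
    covered = sum(hours * days for _, hours, days in schedule)
    if covered != 24 * 7:
        raise ValueError(f"schedule covers {covered} hours, expected 168")
    return sum(replicas * hours * days for replicas, hours, days in schedule)


baseline = 8 * 24 * 7  # 8 always-on replicas
optimized = weekly_gpu_hours(SCHEDULE)
saved = baseline - optimized
print(f"{baseline} -> {optimized} GPU-hours/week ({saved / baseline:.1%} less), "
      f"${saved * GPU_HOURLY_RATE:,.0f}/week, ${saved * GPU_HOURLY_RATE * 52:,.0f}/year")
```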

Implementation Best Practices

Phase 1: Assessment (Week 1-2)

Deploy DCGM Exporter via the GPU Operator, configure Prometheus with 30-day retention, and import Grafana dashboards. Validate data collection from all GPUs. Allow at least 7 days of baseline collection to capture weekly patterns. Resist making optimization changes during the baseline period; focus on observation.

Phase 2: Quick Wins (Week 3-4)

Non-production optimization: Audit dev/staging/QA replica counts. Scale development to 1-2 replicas max, staging to 2-3 replicas matching test load, QA to 1 replica for automated testing. Typical result: 60-80% reduction in non-prod GPU usage with zero production risk.

Idle workload cleanup: Identify GPUs with under 5% utilization over 7 days and zero-traffic inference services. Delete or hibernate immediately—pure waste with zero business value.

Enable spot instances: Convert training jobs to spot/preemptible instances (60-80% cheaper) with checkpoint/restore for resilience. This single change can deliver large training cost savings.

Phase 3: Production Optimization (Week 5-8)

Week 5-6: Implement gradual replica scale-down (e.g., 8→4 replicas) with rolling updates, zero downtime. Monitor continuously with automated alerts for latency degradation (p99 > 500ms) and GPU saturation (avg > 85%).

Week 7-8: Reduce further if the Week 5-6 step succeeds (e.g., 4→2 replicas). Implement HPA with GPU utilization and latency metrics, min/max replica bounds, and appropriate scaling behaviors.

Phase 4: Advanced Features (Week 9-12)

Implement checkpoint/restore for training with spot instances, automated hourly checkpoints, S3/GCS backup, automatic restore capability. Deploy MIG for small model inference enabling multiple services per GPU with hardware isolation. Configure schedule-based autoscaling for predictable usage patterns.

Phase 5: Governance and Continuous Improvement

Establish FinOps processes with automated weekly cost reports showing GPU hours, costs, utilization, waste estimates, top consumers, optimization opportunities, and ROI achieved. Implement resource request approvals that require justification labels, cost center attribution, and workload type classification.

Set team-level targets tracking target utilization %, current utilization %, monthly budget, current spend, and required actions to create organizational accountability beyond platform team responsibility.

Success Metrics

Track average GPU utilization (baseline 18% → target 60%), cost per useful GPU-hour (baseline $217 → target $67), total monthly spend (baseline $450K → target $180K), and workload efficiency by type (training 70% target, inference 60%, research 40%, batch 70%).

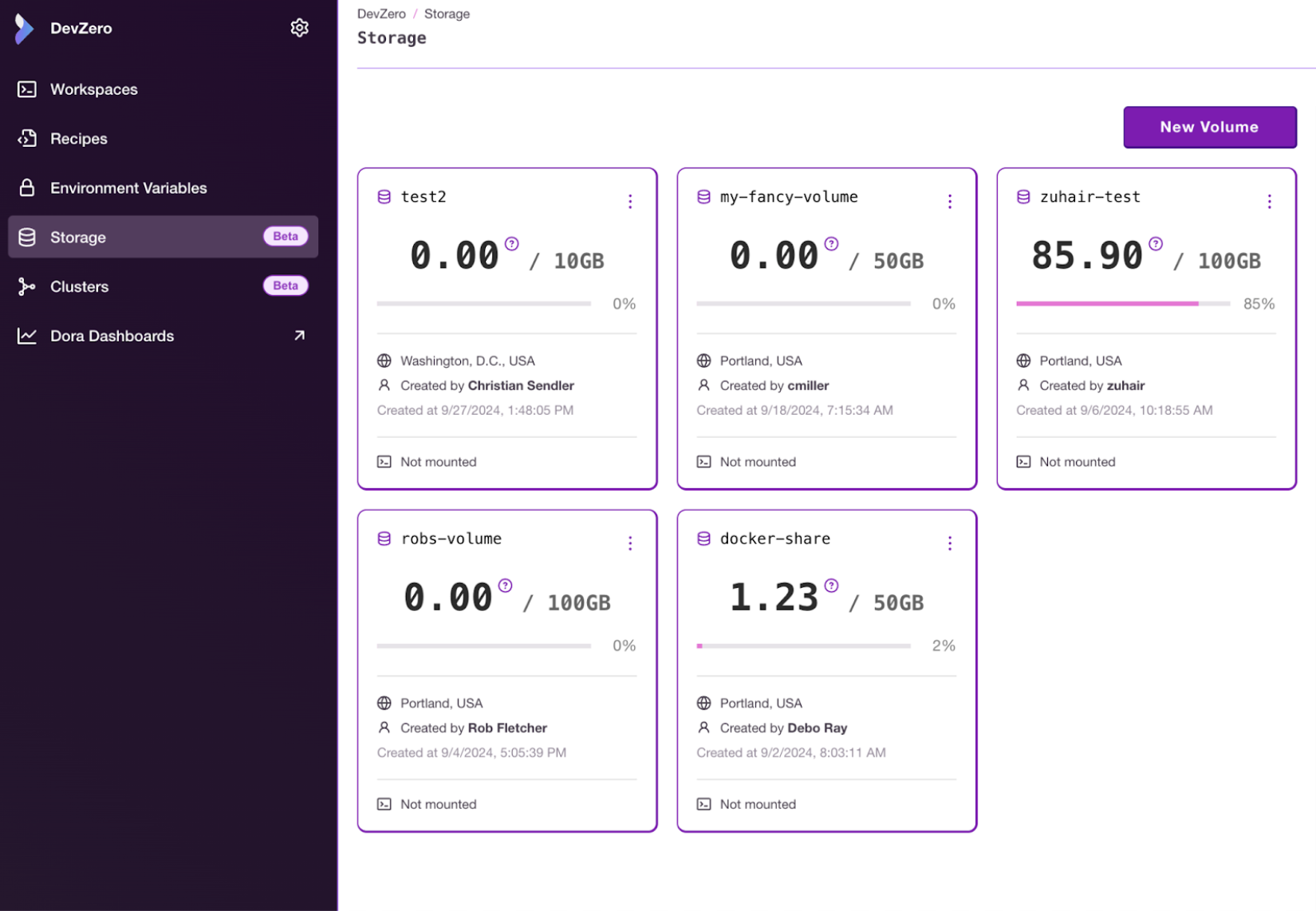

DevZero Platform

DevZero provides automated GPU cost optimization through continuous monitoring, analysis, and rightsizing.

Automated Discovery

Real-time DCGM monitoring every few seconds, integrated CPU/memory/GPU analysis, ML models that automatically identify waste patterns, and 30-90 day historical trend analysis. DevZero automatically detects over-replicated services (high VRAM, low compute), oversized GPU choices (low VRAM use on expensive SKUs), idle resources (under 10% utilization for 48+ hours), missing checkpointing (training on expensive on-demand instances), and temporal misalignment (constant replica counts despite changing usage patterns).

Continuous Optimization

Five-phase loop:

- Monitor - every 10s: DCGM, cAdvisor, K8s metrics

- Analyze - every 5min: ML pattern detection, baseline comparison

- Recommend - real-time: optimization suggestions with ROI/risk

- Execute - automated for non-prod/low-risk, approval for production

- Validate - continuous: monitor post-change, automatic rollback if degradation, update models.

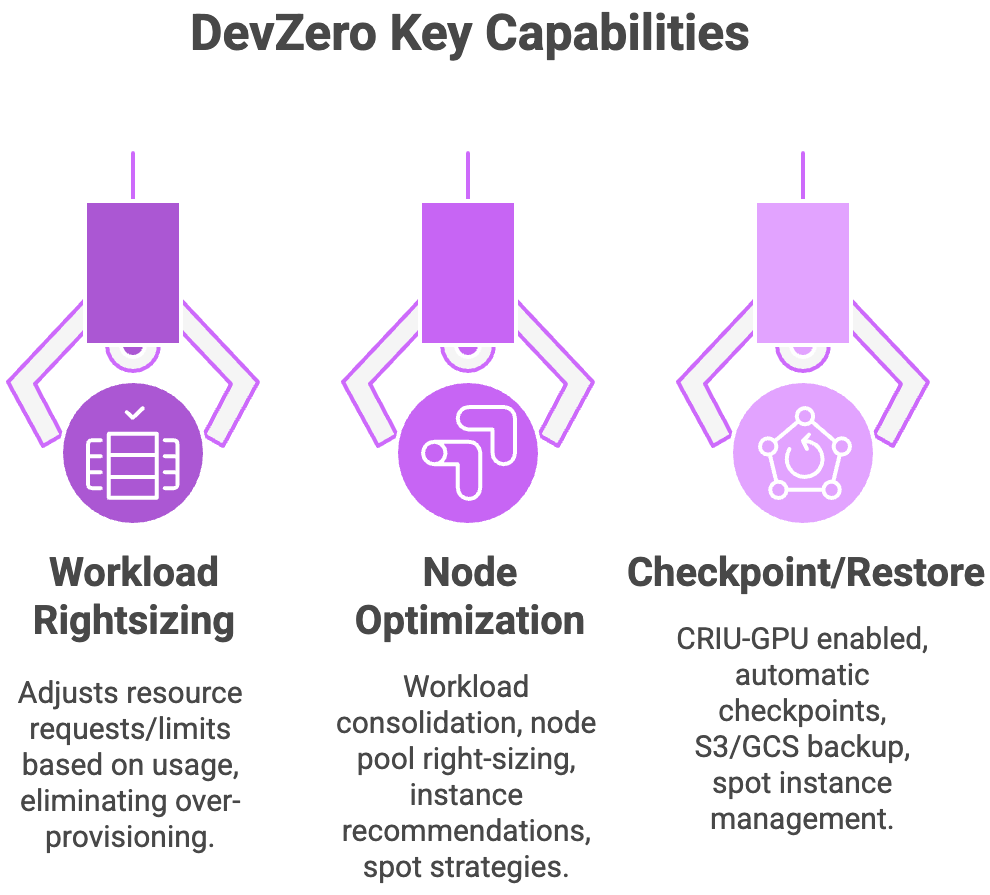

Key Capabilities

Workload-level rightsizing: Automatically adjusts resource requests/limits based on actual measured usage over time, eliminating over-provisioning while maintaining performance buffers.

Node-level optimization: Workload consolidation (bin-packing), node pool right-sizing, automatic instance type recommendations, spot/preemptible strategies.

Checkpoint/restore integration: CRIU-GPU enabled automatically, no code changes required. Automatic hourly checkpoints, S3/GCS backup, spot instance management, automatic restore on interruption, zero-config migration.

Customer Results

AI Startup (Inference-Heavy)

Before: 120 GPUs, 22% utilization, $420K/month.

After 3 months: 45 GPUs, 68% utilization, $145K/month. Savings: $275K/month (65% reduction), 18× first-year ROI.

Enterprise ML Platform (Training-Heavy)

Before: 200 on-demand training GPUs, no checkpointing, 19% utilization, $720K/month.

After: 75 on-demand + 125 spot GPUs, automated checkpointing, 74% utilization, $220K/month. Savings: $500K/month (69% reduction), plus 60% faster training.

Research Institution

Before: 80 GPUs, 82% idle time, no automatic cleanup, $345K/month.

After: 30 GPU shared pool, 2-hour idle timeout, on-demand scaling, $125K/month. Savings: $220K/month (64% reduction), increased researcher satisfaction.

Conclusion

GPU waste in Kubernetes represents one of the most expensive infrastructure optimization opportunities. With 15-25% average utilization and a 10-50× GPU cost premium, organizations waste hundreds of thousands to millions of dollars annually.

Solutions require both technical and organizational approaches:

- Technical: DCGM monitoring, checkpoint/restore, replica right-sizing, appropriate GPU SKU selection, MIG for multi-tenancy, schedule-based autoscaling.

- Organizational: FinOps processes, approval workflows, regular reviews, team-level targets, best practice dissemination.

Organizations taking systematic approaches achieve 40-70% cost reduction, improved application performance, faster innovation, and competitive advantage. As AI/ML grows in importance, GPU efficiency becomes a critical differentiator. Organizations mastering these strategies today position themselves to scale AI infrastructure cost-effectively tomorrow.

Take Action on Your GPU Waste

Don't let another month pass with 75%+ of your GPU budget going to waste. DevZero makes GPU optimization automatic:

- Automated rightsizing across all workloads and node groups

- 40-80% cost reduction achieved by customers in first 90 days

- Zero code changes required - works with your existing infrastructure

Get Started Today

- Sign up for a free 30-day trial with comprehensive waste analysis and ROI projection for your cluster. Start Your Free Trial →

- Phased rollout: Week 1-2 monitoring and recommendations; Week 3-4 non-prod optimization (60% of savings); Week 5-8 production optimization; Week 9+ advanced features.

- Dedicated customer success engineer, weekly reviews, FinOps integration, custom strategies. Schedule a Demo →
