Kubernetes adoption continues to grow. The Red Hat State of Enterprise Open Source 2022 report showed 70% of organizations using Kubernetes. The CNCF Annual Survey points the same way, with a whopping 96% of respondents reporting that they are either using or evaluating Kubernetes.

But adoption numbers don’t paint the full picture. There is wide variance in what Kubernetes usage looks like across organizations. For some, it means full usage from local clusters to production. Others are in a hybrid state, with some workloads running in Kubernetes in higher environments while others still rely on Docker and Docker Compose.

In my experience, most companies fall in the latter camp. Most commonly, production and production-like environments (e.g., UAT, staging) are running fully-featured Kubernetes clusters managed by DevOps and infra teams. Lower environments are typically left for developers to continue using their tool of choice to develop and test.

Problems with the Hybrid State

Most organizations are hesitant to dive fully into Kubernetes for a myriad of reasons:

- Application developers lack either the expertise or the desire to become proficient in Kubernetes.

- DevOps or infra teams are too overstretched to create a great developer experience for local or testing environments.

- Different teams own different environments (e.g., prod owned by production engineering vs. sandbox owned by developer productivity team) with different goals.

Unfortunately, this hybrid state ends up creating more work for everyone involved. Developers have to maintain their own set of Docker Compose files and coordinate with DevOps engineers to translate them into Kubernetes manifests for deployment. The divergence between the two environments can cause inconsistencies when testing application behavior beyond functionality. Differences in config/secret management and networking are the most common sources of extra reconciliation work.

The good news is that the Kubernetes ecosystem has matured a ton over the last few years to make this transition easier. We’ll dive into some possible strategies for your teams.

Kompose

For teams with significant investment in Docker Compose, the easiest way to transition over to using Kubernetes is via Kompose (Kubernetes + Docker Compose). Kompose is an official Kubernetes project that provides an easy CLI command to convert Docker Compose concepts to Kubernetes manifests:

kompose convert -f docker-compose.yaml

Under the hood, Kompose uses a library called compose-go to parse and load Docker Compose files. Not everything maps directly to a Kubernetes manifest, but it does a good job converting most applications. You can look at the conversion matrix for a full list of what is supported.
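
As a rough illustration of that conversion, the sketch below writes a small Compose file and runs it through `kompose convert`. The `web` service, its image, port mapping, and the `kompose-demo` directory are all made up for the example, and the `-o` flag is used here only to keep the output in one directory. Exact file names and generated fields may vary by Kompose version.

```shell
# Hypothetical demo directory and Compose file; names are illustrative only.
mkdir -p kompose-demo/k8s

cat > kompose-demo/docker-compose.yaml <<'EOF'
services:
  web:
    image: nginx:1.25
    ports:
      - "8080:80"
    environment:
      LOG_LEVEL: info
EOF

# Convert only if kompose is installed; otherwise just say so.
if command -v kompose >/dev/null 2>&1; then
  # -o points at an existing directory so the manifests land inside it.
  kompose convert -f kompose-demo/docker-compose.yaml -o kompose-demo/k8s/
  ls kompose-demo/k8s
else
  echo "kompose not found; skipping conversion"
fi
```

For a service like this one, Kompose typically emits a Deployment and a Service manifest, which you can review and then apply with `kubectl apply -f kompose-demo/k8s/`.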

The downside to this approach is that it outputs vanilla Kubernetes manifests that are harder to reuse or distribute. If infra teams are using other Kubernetes packaging tools such as Helm or Ksonnet, there will still be some work to be done to fully integrate.
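
One partial bridge worth knowing about is Kompose's `--chart` (`-c`) option, which wraps the converted manifests in a basic Helm chart. The sketch below assumes a hypothetical `api` service and a `kompose-chart-demo` directory. As far as I can tell, the generated templates are still static manifests, so parameterizing them through `values.yaml` remains manual work.

```shell
# Hypothetical demo directory; the Compose file is a minimal stand-in.
mkdir -p kompose-chart-demo

cat > kompose-chart-demo/docker-compose.yaml <<'EOF'
services:
  api:
    image: registry.example.com/api:1.0.0
    ports:
      - "3000:3000"
EOF

if command -v kompose >/dev/null 2>&1; then
  # --chart asks Kompose to emit a Helm chart instead of loose manifests.
  (cd kompose-chart-demo && kompose convert -f docker-compose.yaml --chart)
else
  echo "kompose not found; skipping chart generation"
fi
```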

Helm

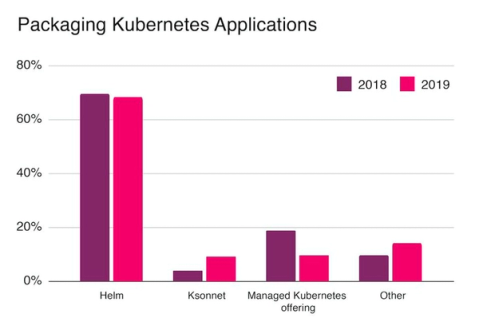

The other option is to standardize on using Helm, which is the most popular way to package Kubernetes manifest from the start.

How Helm stacks up against other forms of packaging K8s applications

Helm provides a nice CLI to bootstrap a new chart:

helm create <chart-name>

For most applications, updating the Docker container image/tag, setting the port number for networking, and adding various environment variables are sufficient to get the application running.
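
A minimal sketch of those edits, assuming the default scaffold produced by `helm create` in recent Helm 3 releases, which exposes `image.repository`, `image.tag`, and `service.port` in `values.yaml`. The chart name `my-app`, the registry URL, and the port are hypothetical. Rendering with `helm template` lets you inspect the result without touching a cluster.

```shell
# Hypothetical chart name and override values; adjust to your application.
mkdir -p helm-demo

cat > helm-demo/my-values.yaml <<'EOF'
image:
  repository: registry.example.com/my-app
  tag: "1.0.0"
service:
  port: 8080
EOF

if command -v helm >/dev/null 2>&1; then
  # Scaffold a chart, then render it locally with the overrides applied.
  (cd helm-demo && helm create my-app)
  helm template my-app helm-demo/my-app -f helm-demo/my-values.yaml
else
  echo "helm not found; skipping chart scaffold"
fi
```

The default scaffold may not expose environment variables as a value, so adding them usually means editing `templates/deployment.yaml` to include an `env:` block fed from `values.yaml`.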

For more mature teams, a standard Helm chart can be created to allow developers to use that template instead of starting from scratch. This approach works well for most stateless applications that don't need special storage components.
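
One way to share such a template is Helm's starter mechanism: `helm create --starter` copies an existing chart and replaces every `<CHARTNAME>` placeholder with the new chart's name. The sketch below builds a deliberately tiny starter. The `team-starter` chart, its values, and the `starter-demo` directory are hypothetical, and a real starter would carry your team's standard Service, probes, and labels as well.

```shell
# Build a tiny, hypothetical starter chart. --starter needs a starter name
# or an absolute path, so the path is anchored at the current directory.
STARTER_DIR="$PWD/starter-demo/team-starter"
mkdir -p "$STARTER_DIR/templates"

cat > "$STARTER_DIR/Chart.yaml" <<'EOF'
apiVersion: v2
name: team-starter
description: Illustrative starter chart
version: 0.1.0
EOF

cat > "$STARTER_DIR/values.yaml" <<'EOF'
image:
  repository: registry.example.com/placeholder
  tag: "latest"
service:
  port: 8080
EOF

cat > "$STARTER_DIR/templates/deployment.yaml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: <CHARTNAME>
spec:
  replicas: 1
  selector:
    matchLabels:
      app: <CHARTNAME>
  template:
    metadata:
      labels:
        app: <CHARTNAME>
    spec:
      containers:
        - name: <CHARTNAME>
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
          ports:
            - containerPort: {{ .Values.service.port }}
EOF

if command -v helm >/dev/null 2>&1; then
  # Creates starter-demo/my-service from the starter above.
  (cd starter-demo && helm create my-service --starter "$STARTER_DIR")
else
  echo "helm not found; skipping chart creation"
fi
```

Developers then only touch `values.yaml` in the generated chart, while the infra team evolves the starter in one place.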

For a comprehensive dive into Helm, check out: https://levelup.gitconnected.com/helm-101-for-developers-1c28e734937e

Wrapping Up

Encouraging developers to use Kompose or Helm does not, on its own, fully solve the issue of standardizing Kubernetes usage across the stack. However, it does provide a good starting point for reducing the redundant work of keeping both Docker Compose files and Kubernetes manifests up to date. It also helps to kickstart meaningful conversations on how best to give developers a production-like environment, whether through a local or a remote cluster, while abstracting away the infrastructure.

DevZero’s solution can take your existing Kubernetes artifacts (Helm charts, Skaffold files, deployment YAMLs) and build templatized development environments from them. Each developer gets their own namespace with all the relevant apps running inside it, and a devpod is placed in each of these namespaces. When you code in your local IDE, you can run integration tests against the running apps, exactly as if they were in production. With the “pod interception” feature, engineers can redirect traffic from long-chains to a debugger process directly in their IDEs.

How this impacts engineers:

- Getting end-to-end confidence in shipping new features without having to wait for CI, etc.

- Not having to test in shared tenancy environments

- Fewer issues detected in production (and not having to reproduce issues locally)

How this impacts businesses:

- Engineering efficiency: Product is shipped faster (with a reduction in lead time to deploy)

- Reduced cloud costs from shared tenancy environments (since DevZero’s environments are disposable and come with configuration-driven cost management)